Everyone has been talking about how great ChatGPT is at writing. But how much of it is true and what is hyped? Satyen K. Bordoloi tests it to find out.

The greatest fear in everyone’s mind, fed as we are on AI doomsday scenarios, is that with ChatGPT getting so good at contextualising information, no job is safe from AI. Examples are given of how well it can write and how people are using it to write poems or screenplays.

In the two months that I have been using ChatGPT, curiosity has led me to test the model multiple times to see how it does. What I found is a mixed bag. Let me start with what’s good.

I asked ChatGPT to make a few jokes about itself. It volunteered five:

1. Why did ChatGPT cross the road? To get to the other dataset!

2. I tried to teach myself how to tell jokes, but I kept getting stuck in an infinite loop of setup and punchline.

3. People say I’m a great conversationalist, but that’s only because I have millions of things to say and I never get tired!

4. I once tried to make a “yo mama” joke, but then I realized I don’t have a mother…or a sense of humor.

5. I’m like a dictionary, always ready with an answer, but nobody wants to hang out with me at parties.

These may not be laugh riots, but they sure are witty and self-parodying. In this department, I give ChatGPT a thumbs up.

Writing Articles

The very first task I gave ChatGPT was to write a 1000-word article on quantum computing, knowing how tough that subject is. As I mentioned in another Sify article, where I asked ChatGPT to describe all it can do and it took two days to get it right, the system is not good at counting words. Instead of 1000 words, it gave me 434. Which would be fine if the writing were any good.

ChatGPT began: “Quantum computing is a field of computing that uses the principles of quantum mechanics to perform operations on data. It has the potential to revolutionize many fields, including medicine, finance, and materials science, by allowing certain calculations to be performed much faster than is possible on classical computers.”

The last paragraph was: “In conclusion, quantum computing is a field that uses the principles of quantum mechanics to perform operations on data. It has the potential to revolutionize many fields by allowing certain calculations to be performed much faster than on classical computers. However, there are many challenges to overcome, including maintaining the integrity of the qubits and scaling up the number of qubits in a quantum computer. Despite these challenges, the field of quantum computing is rapidly advancing, and many researchers are optimistic about its potential.”

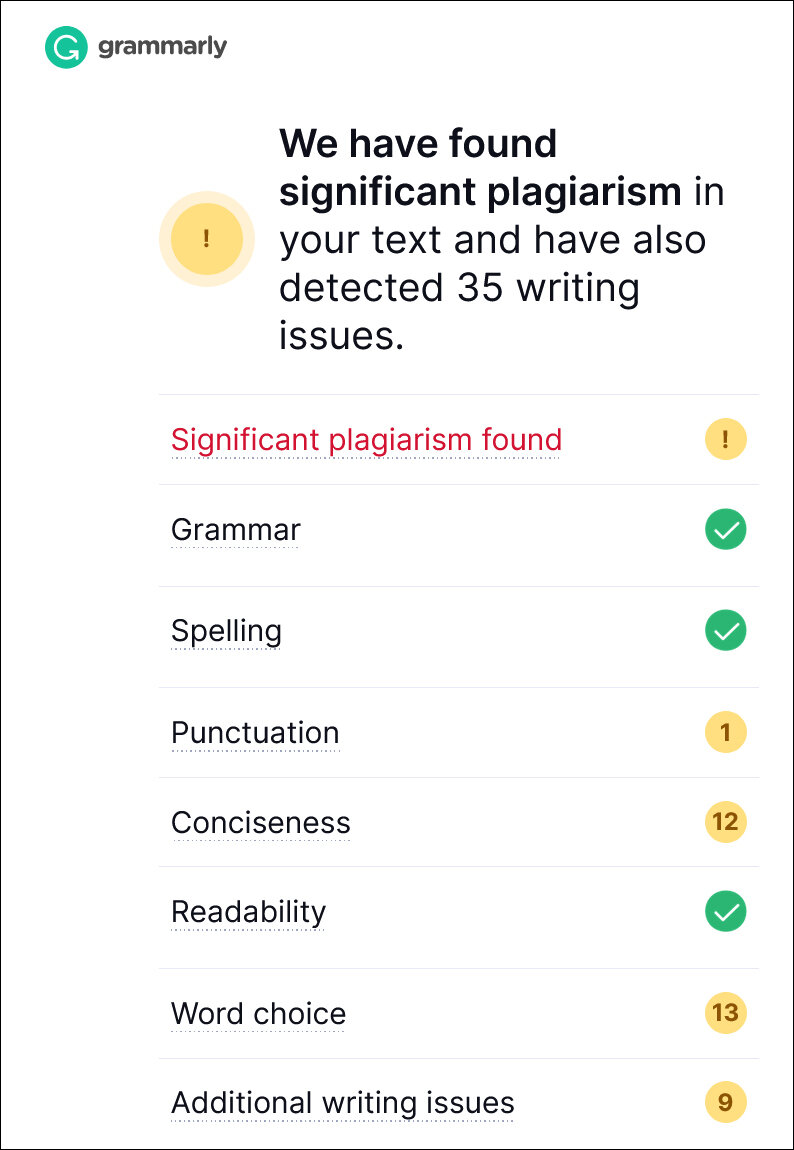

This is not only repetitive and boring, but blander than Wikipedia. But the biggest problem emerged when I ran it through plagiarism checkers. The bad ones found nothing, but Grammarly, which apparently checks 16 billion web pages, declared “Significant plagiarism found”. More than that, it found 35 writing issues. My heart leapt with joy. Its writing is worse than mine on every parameter.

Generating Stories, Writing Scripts

I am also a screenwriter and a creator of screen stories. Naturally, I had to try its story-generating skill. I told ChatGPT to “Create a plot for a murder mystery set in Mumbai.” Its story was titled “The Bollywood Murders” and went thus:

“When up-and-coming Bollywood star, Priya Patel, is found murdered in her dressing room, Inspector Vikram Singh is called in to solve the case. As he delves into the world of glitz and glamour, he finds that Priya had many enemies in the cutthroat industry, including her jealous co-star, Rohit Shah, and her arrogant director, Karan Mehra. However, things become even more complicated when a string of murders rock the Bollywood scene, all with links to Priya’s death. Inspector Singh must navigate through a sea of red herrings and fake leads to uncover the truth, aided by Priya’s feisty assistant, Aisha, and a retired detective with a personal connection to the case. As the investigation deepens, it becomes clear that the killer will stop at nothing to keep their secret, leading to a dramatic showdown that will leave you guessing until the very end.”

The story is pitifully inadequate, cliched and devoid of interesting plot points or twists, which are crucial for mysteries. Worse still, Grammarly once again found it plagiarised. This is the greatest danger of asking ChatGPT to create a plot: chances are it is picking plot points from someone else’s story, a story that already exists, and just recontextualising them. Not only is this unethical; if a generated plot point is found to be copied, and in today’s world it most definitely will be, it will ruin a writer’s career.

But I didn’t give up yet. I had read somewhere that you have to have a conversation with it and get it to fine-tune the stories. I got it to create another story, but was so underwhelmed even after detailed prompts and discussions that I gave up. Another time I asked it to write a scene in screenplay format from a scenario I had given. The dialogues were so insipid that I was left facepalming.

The results were no different when I tried to have it adapt an old story to modern times. The output was downright atrocious.

However, where ChatGPT did prove useful in my screenwriting was as a sparring partner. In a film I am writing, I needed an incident to show the protagonist’s kindness. So, I asked ChatGPT to give me examples of humans being kind. I prodded it multiple times, and each time it gave insipid answers like donating blood, carrying bags, volunteering, paying for someone else, offering rides, complimenting strangers etc. None of that proved useful even after numerous questions. However, doing this exercise and reading its hare-brained ideas did trigger my own brain, and I eventually came up with an original idea of my own.

Plagiarism Research

Turns out that when it comes to testing ChatGPT for plagiarism, I needn’t have bothered. A research team led by Penn State University undertook a study to check how language models that generate text in response to user prompts copy content. They focussed on three types of plagiarism: verbatim, i.e. direct copy-pasting; paraphrasing, or restructuring content; and the copying of ideas.

They tested this on GPT-2 and not on the GPT-3 family of models that ChatGPT is built on, because GPT-2’s training data is available online, allowing researchers to check directly. Is it any surprise that they found all three types of plagiarism in the generated content? They also found that fine-tuned language models reduced verbatim plagiarism, but still paraphrased liberally and copied ideas.

To use ChatGPT’s bad ending: “In conclusion,” this LLM could be a good partner for creating jokes, understanding concepts and running searches, and a good sparring partner. But when it comes to actual writing, it is the pits. The only ones who’ll actually use it to ‘write’ anything are kids who don’t know better. For the rest of us, sadly, it is back to what Hemingway called writing: “sit staring at the blank sheet of paper until drops of blood form on your forehead.”
