In a world that is rapidly changing with the advancement of artificial intelligence, deepfakes might be the next big problem, Adarsh speculates.

Rana Ayyub is an acclaimed Indian journalist based in Mumbai. She writes a column for the Washington Post and is the author of Gujarat Files: The Anatomy of a Cover Up. She is often in the news for being overtly critical of the BJP, India’s ruling party, and regularly takes it on in her column.

In April 2018, a pornographic video allegedly featuring Ayyub started circulating on WhatsApp and social media. It led to harassment and humiliation, and even resulted in Ayyub being admitted to hospital with heart palpitations.

The person in the video, however, was not Ayyub. It was a deepfake: footage of a porn actor with Ayyub’s face superimposed on it. While this might come as a surprise to many, it was hardly the first deepfake pornographic attack on a celebrity.

In the previous year, Hollywood actors Gal Gadot and Emma Watson had spoken out about their faces being used in deepfake videos. According to a sensity.ai report, 96 per cent of deepfakes are pornographic videos, with over 135 million views on pornographic websites alone.

What is a Deepfake?

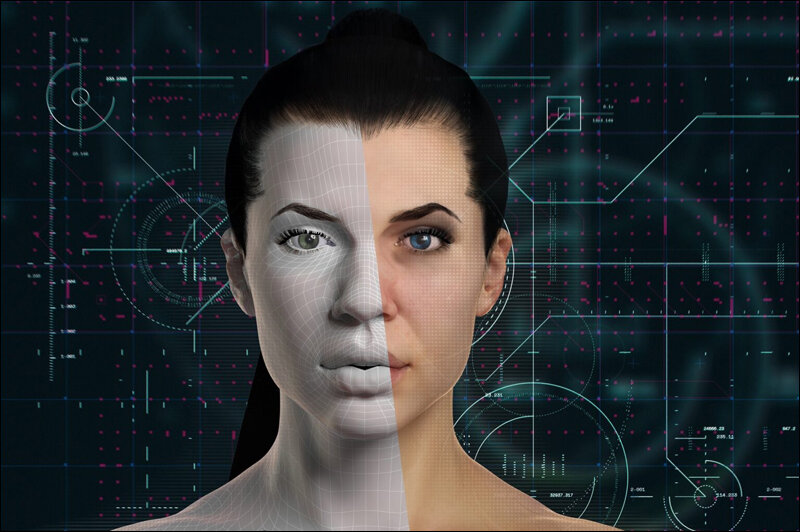

Deepfakes are digital media – video, audio, or images – edited and manipulated using Artificial Intelligence (AI). The technology produces hyper-realistic falsifications: it uses AI to alter existing audio-visual content or to create new content.

Deepfakes are usually developed to attack individuals and institutions. Access to commodity cloud computing, publicly available AI research algorithms and vast amounts of media has created the perfect setup to democratise the creation and manipulation of media. The resulting synthetic media content is referred to as deepfakes.

Deepfakes can have many benefits in areas such as accessibility, education, film production, criminal forensics, and artistic expression. Unfortunately, they are more often used to damage reputations, fabricate evidence, defraud the public and undermine trust in democratic institutions.

How a Deepfake is Created

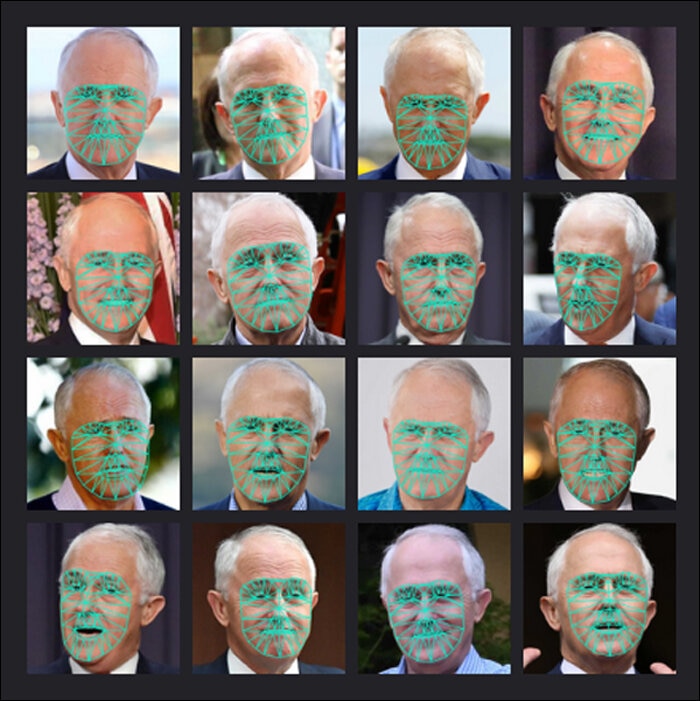

A deepfake is created by training a neural network to discover the patterns needed to produce a convincing picture, and then building a machine learning model from those patterns. The amount of data available for training is critical: the larger the dataset, the more accurate the model. Unfortunately, large training datasets are freely available on the internet.

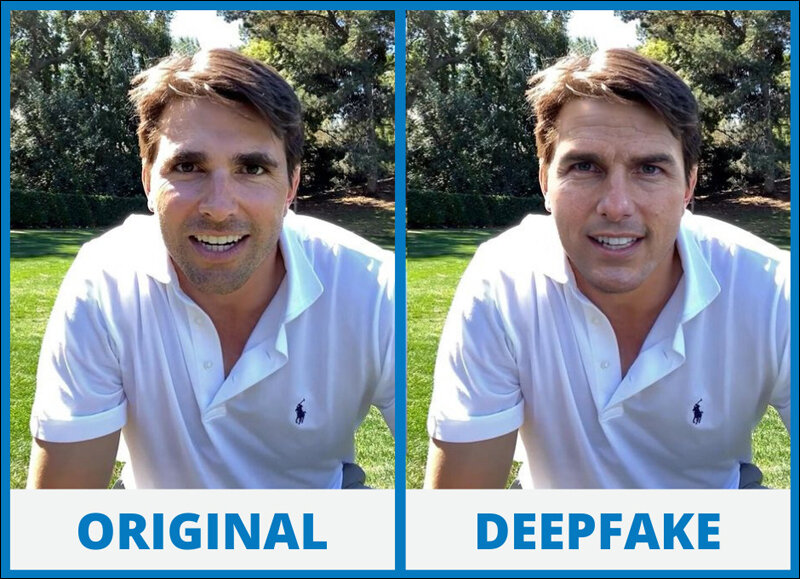

The quality of deepfakes, and the threat they pose, have increased because of two recent developments. The first is the adoption of Generative Adversarial Networks (GANs). A GAN operates with two models: a generator and a discriminator. The generator produces fake samples, and the discriminator repeatedly tests them against the original dataset, which pushes the generator to improve until its output is hard to tell apart from real data. This results in a false image that cannot be detected by the human eye but is under the control of an attacker.
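The generator-versus-discriminator loop can be illustrated with a deliberately tiny example. The sketch below is not how real deepfake tools are built: it assumes one-dimensional "data" (numbers drawn around 4.0), a linear generator `a*z + b` and a logistic discriminator `sigmoid(w*x + c)`. All names (`d_step`, `g_step`, `train`) and all parameter values are hypothetical, chosen only for illustration.

```python
import math
import random

EPS = 1e-12  # keeps log() away from zero


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def d_loss(w, c, real, fake):
    """Discriminator loss: small when real samples score near 1 and fakes near 0."""
    on_real = -sum(math.log(sigmoid(w * x + c) + EPS) for x in real) / len(real)
    on_fake = -sum(math.log(1.0 - sigmoid(w * x + c) + EPS) for x in fake) / len(fake)
    return on_real + on_fake


def d_step(w, c, real, fake, lr):
    """One gradient-descent step on the discriminator parameters (w, c)."""
    gw = gc = 0.0
    for x in real:
        p = sigmoid(w * x + c)
        gw -= (1.0 - p) * x / len(real)
        gc -= (1.0 - p) / len(real)
    for x in fake:
        p = sigmoid(w * x + c)
        gw += p * x / len(fake)
        gc += p / len(fake)
    return w - lr * gw, c - lr * gc


def g_loss(a, b, w, c, noise):
    """Generator loss: small when the discriminator scores the fakes near 1."""
    return -sum(math.log(sigmoid(w * (a * z + b) + c) + EPS) for z in noise) / len(noise)


def g_step(a, b, w, c, noise, lr):
    """One gradient-descent step on the generator parameters (a, b)."""
    ga = gb = 0.0
    for z in noise:
        p = sigmoid(w * (a * z + b) + c)
        ga -= (1.0 - p) * w * z / len(noise)
        gb -= (1.0 - p) * w / len(noise)
    return a - lr * ga, b - lr * gb


def train(steps=2000, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on toy 1-D data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator starts by producing numbers around 0
    w, c = 0.1, 0.0   # discriminator starts nearly undecided
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # assumed "real" data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]
        w, c = d_step(w, c, real, fake, lr)   # discriminator learns to separate
        a, b = g_step(a, b, w, c, noise, lr)  # generator learns to fool it
    return a, b, w, c
```

Calling `train()` returns the final generator and discriminator parameters. In a setup like this the generator's offset `b` tends to drift toward the real data, although simple GANs are known to oscillate rather than settle, so the exact values should not be relied on.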

The second development is the combination of 5G bandwidth and cloud computing power, which allows video streams to be manipulated in real time.

Problems Caused by Deepfakes

Deepfake videos can show people saying or doing vile things that they never actually did. Terrorist groups or insurgents can use them to show others making inflammatory speeches or taking part in controversial actions, in order to stir up anti-state sentiment among the masses.

(Image Credit: Facebook)

Even if the victim somehow manages to prove that the video is fake, it may be too late to repair their tarnished reputation. Moreover, the very existence of deepfakes can lead to genuine videos being dismissed as doctored: it lends credibility to denials, because anything can be dismissed as a deepfake.

Deepfake pornography can threaten, intimidate, and cause psychological harm. Apart from threats of extortion or blackmail, it can also lead to emotional distress and other collateral consequences like job loss and reputation loss.

How to Detect a Deepfake

Detecting a deepfake is often very tricky, and it involves using technology to spot inaccuracies. Ways to catch a doctored video include: biological signals, such as imperfections in the natural changes of skin tone caused by blood flow; imperfect synchronisation between spoken words and mouth movements; irregular facial movements, where the face and head do not move together correctly; and inconsistencies between the individual frames that make up a video. But this is easier said than done.
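As a rough illustration of the frame-inconsistency idea, the sketch below assumes some per-frame measurement has already been extracted from the video (for example, average skin-tone brightness in the face region) and flags frames where that measurement jumps far more than usual. The function name `flag_inconsistent_frames` and the parameters `k` and `min_scale` are hypothetical; real detectors are considerably more sophisticated.

```python
from statistics import median


def flag_inconsistent_frames(signal, k=5.0, min_scale=1.0):
    """Return indices of frames whose change from the previous frame is an outlier.

    signal    -- one number per frame (assumed to be extracted elsewhere)
    k         -- how many "typical deviations" a jump must exceed to be flagged
    min_scale -- floor on the typical deviation, so flat signals do not divide by zero
    """
    # Absolute change between each pair of consecutive frames.
    diffs = [abs(b - a) for a, b in zip(signal, signal[1:])]
    if not diffs:
        return []
    typical = median(diffs)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    spread = median(abs(d - typical) for d in diffs)
    scale = max(spread, min_scale)
    # diffs[i] is the jump into frame i + 1.
    return [i + 1 for i, d in enumerate(diffs) if (d - typical) / scale > k]
```

For a signal such as `[100, 101, 102, 101, 100, 140, 101, 102]`, the function flags frames 5 and 6: the jump into the anomalous frame and the jump back out of it. A smoothly changing signal produces no flags.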

Just as a slight variation in malware may be enough to fool signature-based detection engines, a slight alteration to the method used to generate a deepfake might fool existing detection techniques.
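The malware comparison can be made concrete with a minimal sketch of signature matching, assuming the "signature" is simply a SHA-256 hash of the file's bytes. Real engines use richer signatures, and the helper names `signature` and `is_flagged` are hypothetical, but the weakness is the same: any change to the input produces a signature the detector has never seen.

```python
import hashlib


def signature(data: bytes) -> str:
    """Exact-match signature: the SHA-256 digest of the raw bytes."""
    return hashlib.sha256(data).hexdigest()


def is_flagged(data: bytes, known_signatures: set) -> bool:
    """A file is flagged only if its signature is already on the list."""
    return signature(data) in known_signatures


original = b"example payload"          # stand-in for a known malicious file
known = {signature(original)}          # the detector's signature list
variant = original + b" "              # the same payload with one extra byte
```

Here `is_flagged(original, known)` is true, while `is_flagged(variant, known)` is false even though the two inputs differ by a single byte. A deepfake detector tuned to the artefacts of one generation method can be sidestepped in a similar way by changing that method slightly.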

Aside from that, compressing a deepfake video reduces the number of pixels available to the detection algorithm, which makes the fake harder to catch.
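A crude way to see why compression hurts detection is to shrink a frame by averaging blocks of pixels. This is only a stand-in for real video compression, which works differently, and the function name `downsample` is hypothetical. The sketch assumes a grayscale frame stored as a list of rows.

```python
def downsample(frame, factor):
    """Average each factor x factor block of a grayscale frame into one pixel."""
    rows, cols = len(frame), len(frame[0])
    out = []
    # Ignore any leftover rows or columns that do not fill a whole block.
    for r in range(0, rows - rows % factor, factor):
        out_row = []
        for c in range(0, cols - cols % factor, factor):
            block = [frame[r + dr][c + dc]
                     for dr in range(factor)
                     for dc in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


# A 4x4 frame with a single bright "artefact" pixel.
frame = [
    [0, 0, 0, 0],
    [0, 255, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
small = downsample(frame, 2)
```

After downsampling, the 16 pixels become 4 and the artefact's brightness is diluted from 255 to 63.75, so a detector looking for small, sharp inconsistencies has less to work with.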

How to Handle the Deepfakes Issue

Recent research from iProov showed that 72% of people are still unaware of deepfakes.

Educating the masses and making people aware of the menace is the most effective tool to combat disinformation and deepfakes. Regulation is also needed: the technology industry, civil society and policymakers must work together to develop legislative solutions that regulate, control and punish the creation and distribution of malicious deepfakes.

Social media platforms are also becoming wary of the deepfake issue. All of them have some policy or acceptable-use terms for deepfakes. But regulations will not be enough. There also needs to be easy-to-use and accessible technology to detect deepfakes, authenticate media and amplify authoritative sources.

Most importantly, everyone must take responsibility for being a critical consumer of media and double-check before sharing on social media. The worst part is that the worst is yet to come. With AI advancing each passing day, these videos are bound to become increasingly realistic, and we are in for a tough time.

Strict laws and stringent regulations will need to be put into place or else we will have already lost this war against disinformation before it has even begun.
