Technology evolves just as life does. Nigel Pereira looks into the evolutionary history of one such form of technology, the increasingly ubiquitous data center.

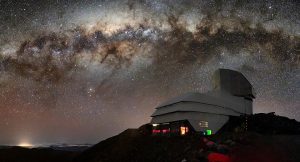

In simple terms, a data center is a dedicated space that houses computer systems along with associated devices such as switches, routers, servers, and storage systems. What started in the 1940s as a way for the US Army to calculate artillery fire, and even to help develop the first thermonuclear bomb, has today become the backbone of what we call the internet.

While the physical architecture and technology have changed dramatically to cover the IoT (Internet of Things), Edge, and Cloud computing, the concept that critical data and applications can be stored and retrieved remotely remains pretty much the same.

Military Defense and the CIA

The first data centers were the rooms built to house ENIAC, and since the machine was primarily for military use, the whole affair was quite secretive and little information was released to the public. This was around the end of the Second World War and the beginning of the Cold War, when there was a mad scramble to develop technology that could be used for defense.

It is therefore not surprising that Harry Truman, the US President at the time, reportedly assigned engineers from the CIA (Central Intelligence Agency) to assist with the development of the ENIAC data centers.

If you’re imagining a room full of microcomputers, think again. This was before the invention of transistorized computers, when everything was done with the help of a complex system of vacuum tubes.

Yes, the first ENIAC system was made of 17,468 vacuum tubes, weighed 30 tons, and took up about 1,800 square feet of space, not to mention 70,000 resistors, 10,000 capacitors, and about five million joints that had to be hand-soldered. Compared to the ENIAC’s ability to execute 5,000 instructions per second, an iPhone 6 can do about 25 billion per second.
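To put those two figures side by side, here is a minimal Python sketch of the arithmetic. It assumes the two rates quoted above are directly comparable, which is a simplification, and the constant and variable names are purely illustrative.

```python
# Rates quoted in the article (treated as comparable for illustration only).
ENIAC_OPS_PER_SEC = 5_000
IPHONE6_OPS_PER_SEC = 25_000_000_000

SECONDS_PER_DAY = 86_400

# How many times faster the phone is than ENIAC.
ratio = IPHONE6_OPS_PER_SEC // ENIAC_OPS_PER_SEC

# How long ENIAC would need for the work the phone does in one second.
eniac_days = ratio / SECONDS_PER_DAY

print(f"Speed ratio: {ratio:,}x")
print(f"One iPhone-second of work on ENIAC: about {eniac_days:.1f} days")
```

In other words, by these numbers the phone is about five million times faster, and a job it finishes in one second would have kept ENIAC busy for nearly two months.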

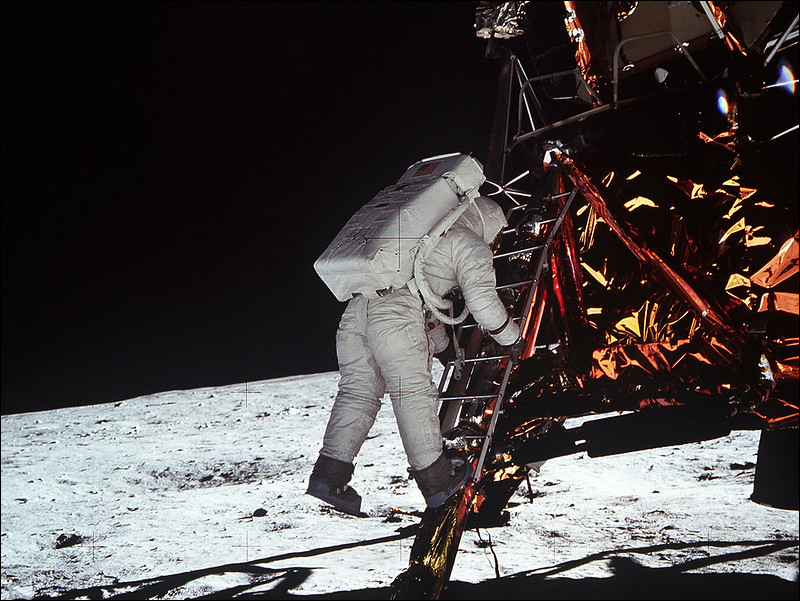

Transistors and the Moon Landing

Fast forward to the mid-1950s, when Bell Labs built TRADIC, one of the first fully transistorized computers, for the US Air Force. Eliminating vacuum tubes made it possible for computers to fit in office spaces and commercial settings, and by the early 1960s Big Blue (IBM) was selling transistorized machines that could be used for things other than defense. Transistorized computers, including IBM mainframes used by NASA, also helped put the first astronauts on the moon.

An interesting story here is that many of the people who programmed these early computers were women who were highly skilled in mathematics.

Continuous innovation during the 60s led to the appearance in 1971 of the first personal computer, the Kenbak-1, with 256 bytes of memory, an 8-bit word size, and a series of lights and switches for I/O (Input/Output).

Though only about 40 machines were built and the company soon folded, this was an important innovation that paved the way for modern PCs. The 70s and 80s continued this trend, with Apple, Intel, Xerox, IBM, and Japanese companies like Hitachi, Sharp, and NEC bringing us incrementally closer to the multimedia PCs of the 90s with their color screens, CD-ROMs, and speakers.

Server Virtualization

Coming back to data centers and how they have evolved over the ages, it wasn’t until the 1980s and the spread of the Unix operating system, first developed at Bell Labs around 1970, that we had an actual client-server architecture that could be used to interface with servers around the world.

When the source code for the first web browser was released in 1993, websites had to be hosted on servers, and those servers had to support growing traffic. This led to the first actual “modern” data centers, comprising thousands of servers built in stacks and surrounded by all the accessories and people needed to keep them running.

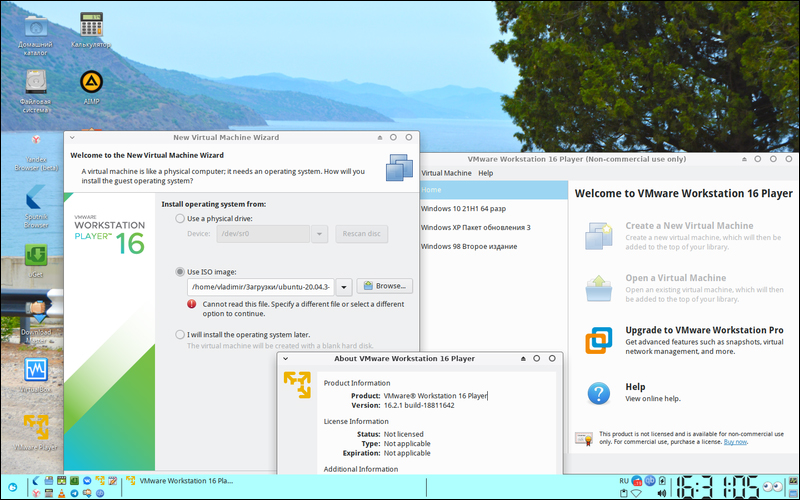

VMware Workstation was born in 1999, and with it the concept of virtual computing, where you could have two or three different operating systems on one computer or server doing different things and supporting different websites.

This was followed in 2002 by ESX Server 1.5, VMware's hypervisor for servers. This was a big step toward making web servers economical, as up to this point server virtualization was not widely available and hosting physical servers was both expensive and inefficient. It can probably be argued that server virtualization is the idea that got us to where we are today, with practically everything in the cloud being virtualized, from memory and storage to computing power and even networks.

The Hybrid Data Center

Today we have hybrid data centers that can connect across multiple public clouds like AWS and Azure as well as on-premises locations and private clouds. Modern data centers have also spread to the Edge, where data can be processed as close to the user as possible for time-critical applications like medical devices, flight controls, or other critical sensors connected to the Internet of Things.

In conclusion, we are producing more data today than ever before, and data centers are going to have to keep innovating to keep up with the deluge of data that humanity can’t seem to do without.
