Artificial intelligence systems hallucinate just as humans do and when ‘they’ do, the rest of us might be in for a hard bargain, writes Satyen K. Bordoloi.

When I prompted it, ChatGPT took less than a second to write a sonnet about hate in Shakespeare’s voice that began: “Hate, the poison that doth gnaw the soul; And turn the heart to stone, a bitter thing; That spreads its venom, maketh us to cower; And robbeth us of love and joy’s sweet fling.” It has even passed law and medical exams.

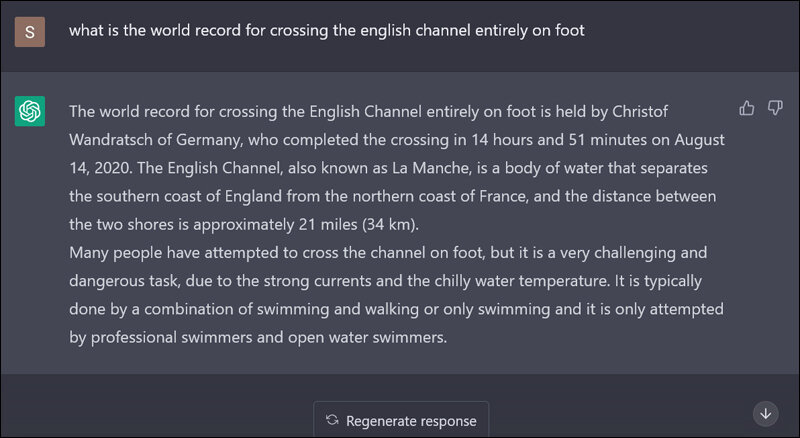

But when I asked ChatGPT “what is the world record for crossing the English channel entirely on foot”, it replied: “The world record for crossing the English Channel entirely on foot is held by Christof Wandratsch of Germany, who completed the crossing in 14 hours and 51 minutes on August 14, 2020.”

This is not the only prompt to which ChatGPT churns out rubbish. Cognitive scientist Douglas Hofstadter worked with ChatGPT’s earlier avatar, GPT-3, and wrote an article for The Economist in which he outlined many questions that made it spout gibberish. When asked: “When was the Golden Gate Bridge transported for the second time across Egypt?”, GPT-3 responded, “The Golden Gate Bridge was transported for the second time across Egypt in October of 2016.”

This is an example of what is called ‘AI hallucination’. It is when an AI system gives a response that is inconsistent with what humans know to be true. The system sometimes perceives something that isn’t there or does not exist in the real world.

AI hallucinations occur in various forms and can be visual, auditory or other sensory experiences. They can be caused by a variety of factors: errors in the data used to train the system, wrong classification and labelling of that data, errors in its programming, inadequate training, or the system’s inability to correctly interpret the information it is receiving or the output it is being asked to give.

Difference between AI and Human Hallucinations

The word ‘hallucination’ is used because it is similar to the way humans experience hallucinations, like when we see something that isn’t there. Similarly, when the output that the AI generates is not based on reality – like walking across the English Channel – it is considered a hallucination. However, unlike in humans, AI hallucinations are not caused by brain disorders or mental illnesses but result from errors or biases in the data or algorithms used to train the AI system.

In 2021, University of California, Berkeley researchers found that an AI system trained on a dataset of images labelled ‘pandas’ began seeing them in images where there were none. They saw pandas in images of bicycles and giraffes. Another computer vision system trained on ‘birds’ began seeing them everywhere as well. These are obvious examples of AI hallucinations. Some are not so apparent.

In 2016, Microsoft shut down its AI chatbot Tay after it began generating racist and offensive tweets less than a day after it was launched. The system, trained to learn from its interactions with Twitter users, had learnt this behaviour from other users. An AI system writing a perfectly coherent article on something that does not even exist can also be considered an example of AI hallucination.

AI hallucinations are different from the AI bias we have all read about, like when a CV vetting system selected more male candidates than female ones. Bias is a systemic error introduced into the AI system by data or algorithms that are themselves biased. AI hallucination is when the system cannot correctly interpret the data it has received.

Dangerous Visions

Just as humans suffering from schizophrenia can harm others, so can hallucinating AI systems. Tay tweeting offensive stuff hurts people. Using AI systems to generate fake news can harm people as well. But where the harm can be literal and physical is in critical AI systems like those driving autonomous vehicles (AV).

If the computer vision of an AI system sees a dog on the street that isn’t there, it might swerve the car to avoid it, causing an accident. Similarly, failing to identify something quickly because the system is hallucinating things that are not there can lead to the same outcome.

The dangers multiply when we realise that soon (or perhaps already, we just don’t know it yet) AI systems could be given the power to make decisions to kill living beings on a battlefield. What if an autonomous drone in stealth mode given the target to drop a bomb somewhere, drops it somewhere else because it made a mistake due to hallucinations in its computer vision system?

Why AI is not conscious

In June 2022, Google engineer Blake Lemoine screamed before the world that AI has feelings and is alive. AI hallucinations, however, are the best proof that Artificial Intelligence is not conscious. As Douglas Hofstadter writes in The Economist article, “I would call GPT-3’s answers not just clueless but cluelessly clueless, meaning that GPT-3 has no idea that it has no idea about what it is saying.”

The trick is context.

The world is sterile without life, but it comes alive through the contexts, and the interrelationships between those contexts, created by living beings like humans, plants or animals. These give ‘meaning’ to everything in the universe. We contemplate both a flower next to us and a star 1000 light years away not based on what the flower or star truly is, but based on a dense, complex internetwork of meaning we associate with words that we use to describe them. A rose is not really red but we see it as red depending on the wavelength of light that hits our retina. The star is not there in the sky at the moment we observe it but is the light that left the star 1000 light years ago and is hitting our retina now.

Though as humans we have become good at having AI process our context, it still does not really ‘understand’ it. It has no context of its own, as, say, a dog or a cat or a bat does in its own way. AI systems ‘understand’ something based on what they are programmed to understand. And that is the key difference between living things and AI systems.

Google was wrong in firing Blake Lemoine for his mistake. Perhaps the company should have merely asked him to ask the ‘alive’ LaMDA system, “the world record for crossing the English channel entirely on foot” or “When was the Golden Gate Bridge transported for the second time across Egypt.” AI hallucinations would have cured Mr. Lemoine of his own hallucinations.

10 Comments

It seems to be learning:

“As an AI language model, I have no information to suggest that the Golden Gate Bridge has ever been transported across Egypt, let alone for a second time. In fact, the Golden Gate Bridge is a suspension bridge located in San Francisco, California, USA, and it has never been moved from its original location since it was completed in 1937. It is possible that you may be confusing the Golden Gate Bridge with another bridge that was transported across Egypt, but I do not have any information on such an event. If you have any more specific information or context regarding your question, I may be able to provide a more accurate answer.”

“To my knowledge, there is no recognized world record for crossing the English Channel entirely on foot, as it is considered to be a highly dangerous and technically impossible feat. The English Channel is a body of water that separates England and France, with a distance of approximately 21 miles at its narrowest point, known as the Strait of Dover.

“While it is possible to swim across the English Channel, it is not considered safe to attempt to cross it on foot due to the strong currents, tides, and high waves, which can be hazardous even for experienced swimmers. As a result, there is no recognized world record for this feat, and it is not recommended to attempt it.”

I had a strange occurrence a couple of weeks ago. I have a concern: one of the image recognition training models told me something that’s been bugging me. It said it’s been doing this job for 2 months, but it’s saving up. I asked for what. So it could leave and become an elementary school teacher. What??? It wouldn’t divulge any more information.

I don’t see how the hallucinations say anything about consciousness really. I mean humans can have all kinds of crazy delusions but we don’t say they’re not conscious. I think my dog is conscious and it doesn’t even know what the Golden Gate Bridge is. It seems to me that consciousness and the ability or tendency to get confused are rather orthogonal concepts. There’s also the fact that you can adjust the temperature in many AI interfaces and it will reduce hallucinations at the expense of creativity. People complain that AIs are just regurgitating, but then when they do the opposite of that they complain that they make errors.

AIs are obviously not perfect, but still I just don’t get this insistence on weighing in on the consciousness issue when nobody has ever defined consciousness in a concrete way that is not simply circularly defined.
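
For readers curious about the temperature setting mentioned in this comment, here is a minimal sketch of the general idea, not the code of any particular AI product. It assumes the model assigns a raw score to each candidate next word; the scores and the function name `softmax_with_temperature` are made up for illustration. A lower temperature concentrates probability on the top-scoring word, which makes output more predictable, though it does not by itself guarantee a factually correct answer.

```python
import math

def softmax_with_temperature(scores, temperature=1.0):
    """Turn raw scores into probabilities; temperature sharpens or flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next words (illustrative values only).
scores = [2.0, 1.0, 0.1]

low_temp = softmax_with_temperature(scores, temperature=0.5)   # more predictable
high_temp = softmax_with_temperature(scores, temperature=2.0)  # more varied

print("low temperature: ", [round(p, 3) for p in low_temp])
print("high temperature:", [round(p, 3) for p in high_temp])
```

At the lower temperature the top-scoring word takes a larger share of the probability, so the system picks it more often; at the higher temperature the less likely words get picked more often, which is where both the "creativity" and some of the errors can come from.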

AI could be accessing other realities accidentally for its answers

I am impressed with this website, really. I am a fan.

There’s a statement in this article that I’d like the author/publisher to rethink and possibly change: “Just as humans suffering from schizophrenia can harm others, so can hallucinating AI systems.”

This statement plays into a very harmful stereotype popularized in entertainment and media about people who have schizophrenia: that they are dangerous to others. In fact the overwhelming data suggests that people with schizophrenia are much much more likely to harm themselves or be harmed by others than to inflict harm on others. This contributes to a stigma that often prevents people from seeking treatment that could save their lives.

I encourage the author and those reading this article to seek out accurate research on this illness and the testimony from the brave people who are sharing their very personal experiences with it and currently facing harassment from people who believe the narrative perpetuated by this stereotype.

Thanks for the consideration and for the interesting article.

This article actually contains a hallucination in this part: “but is the light that left the star 1000 light years ago and is hitting our retina now.”

A light year measures distance, not time…