With Strawberry, OpenAI aims to complete its GenAI ‘Quadrinity,’ writes Satyen K. Bordoloi as he outlines what it could mean for Artificial Intelligence specifically, and the world generally.

For AI doomsayers of the Terminator and Matrix variety, the very combination of the words Artificial and Intelligence signifies a level of logic and reasoning ability found only in humans. Those who either code AI or study it beyond anthropomorphic fears know that even though AI manages a surprising number of tasks, some basic ones that even a toddler can do still elude it. Training AI to achieve human-level reasoning is thus the holy grail, the ultimate fantasy, for AI coders and developers.

That is why the news that OpenAI is working secretly, under the codename ‘Strawberry,’ to develop ‘reasoning’ in its AI systems has the world excited. The idea is to enhance the capabilities of its artificial intelligence models until they reach human-like reasoning and logic.

The Challenge of Reasoning in AI:

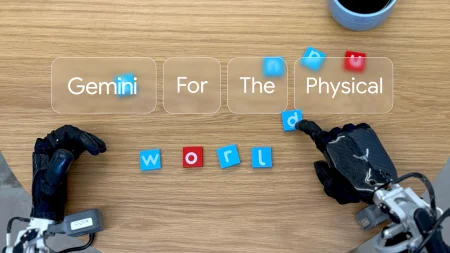

While AI models like ChatGPT, Gemini, Llama, etc., can generate text and answer queries, they do so with borrowed, mass-produced ‘logic.’ These systems have been ‘taught’ to find the word or ‘pixel’ most likely to come after another word or ‘pixel,’ based on the structure of the keywords in the query, their training, and the grammar of the language they have been asked in, or on the image or video, and its style, that they have been asked to create. Tasks that require actual reasoning are tough. I found this while writing an article on AI Hallucination for Sify. When asked how long it would take to walk across the English Channel, ChatGPT couldn’t comprehend that humans cannot walk on water. ChatGPT was taught that logical connection only after this blunder came to light.
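The ‘most likely next word’ idea can be illustrated with a deliberately tiny sketch. This is not how ChatGPT or any production model is built; it is a toy word-pair counter, and the function names (`train_bigram`, `predict_next`) and the three-sentence corpus are made up for illustration. It shows why such a system can continue a sentence fluently without knowing anything about water or walking.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows another across a list of sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Hypothetical training text: the "model" only ever sees word order, not meaning.
corpus = [
    "people walk across the bridge",
    "people walk across the street",
    "people walk across the bridge daily",
]
model = train_bigram(corpus)

print(predict_next(model, "walk"))     # the word seen most often after "walk"
print(predict_next(model, "the"))      # the word seen most often after "the"
print(predict_next(model, "channel"))  # never seen, so there is nothing to predict
```

The counter picks whatever followed a word most often in its training text. Nothing in it represents the fact that a bridge can be walked on and a channel cannot, which is the gap the anecdote above points to.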

‘Logic’ and ‘reasoning’ are words AI systems can’t apply logic to or reason about, because they do not understand the words’ meaning or context. That’s because letters and words are a system of symbols interconnected in a complex web, where the meaning of not a single word or symbol stands disconnected from the meaning and context of other words and symbols. Together, the weave is so dense and complex that recreating it artificially seems beyond reach.

Human brains have evolved over millions of years into an intricate, complex physical weave that matches the weave of symbols and information in the outside world. Stitching that same pattern into an AI system is hard simply because of the infinite connections that the meaning of a single symbol represents within the whole. We have ‘taught’ these systems a good number of these connections so far. But the entire network of connections is far too complex for any machine to reach today. Perhaps with further advancements in computing, especially the rise of quantum computing and of higher computation at lower power consumption, we might get close.

That does not mean AI companies won’t try today.

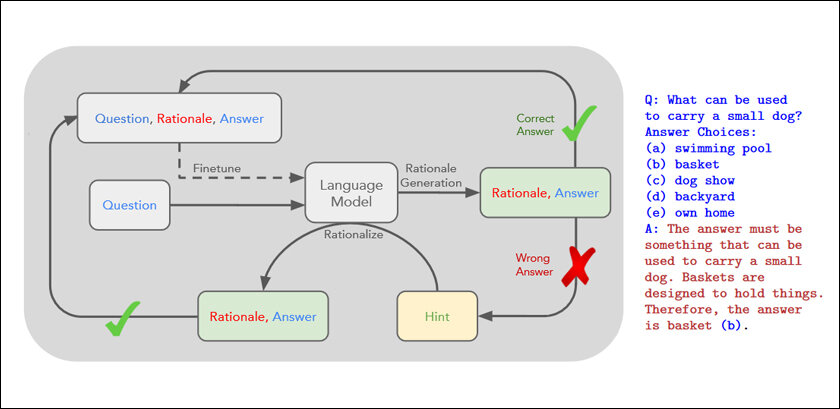

(Image Credit: NeurIPS Proceedings)

Project Strawberry – A New Approach:

Project Strawberry represents OpenAI’s latest effort to overcome these challenges. According to internal documents and sources familiar with the matter, as reported by Reuters, Strawberry aims to enable AI to perform “deep research” by autonomously navigating the internet and planning ahead. This capability has eluded AI models to date, making Strawberry a potentially significant breakthrough.

Exactly how AI would function differently under Strawberry is a closely guarded secret within OpenAI. However, it is expected to involve a specialized way of processing AI models after they have been pre-trained on large datasets. This post-training phase, known as “fine-tuning,” adapts the base models to improve their performance in specific ways. As per the Reuters report, Strawberry’s approach is similar to a method developed at Stanford called “Self-Taught Reasoner” (STaR), which allows AI models to iteratively create their own training data and potentially achieve higher intelligence levels.
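To make ‘iteratively create their own training data’ concrete, here is a toy sketch of a STaR-style generate, filter, and retrain loop. It says nothing about how Strawberry actually works, which is not public. Everything in it is an assumption made for illustration: the ‘model’ is just a weight per candidate strategy, the ‘rationale’ is the strategy’s name, and ‘fine-tuning’ is a simple weight bump rather than real gradient training.

```python
import random

# Hypothetical candidate "reasoning strategies" the toy model can choose from.
STRATEGIES = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}

def generate(weights, question, rng):
    """Sample a strategy in proportion to its weight; return (rationale, answer)."""
    names = list(weights)
    name = rng.choices(names, weights=[weights[n] for n in names])[0]
    a, b = question
    return name, STRATEGIES[name](a, b)

def star_round(weights, dataset, rng):
    """One pass: generate rationales, keep the correct ones, 'retrain' on them."""
    kept = []
    for question, gold in dataset:
        rationale, answer = generate(weights, question, rng)
        if answer == gold:  # filter: only rationales that reach the right answer survive
            kept.append((question, rationale, answer))
    new_weights = dict(weights)
    for _, rationale, _ in kept:  # stand-in for fine-tuning on the kept examples
        new_weights[rationale] += 1.0
    return new_weights, kept

# Toy dataset: the hidden rule is addition, and no pair gives the same sum and product.
dataset = [((3, 4), 7), ((5, 6), 11), ((2, 7), 9), ((1, 9), 10)]
rng = random.Random(0)
weights = {"add": 1.0, "multiply": 1.0}
history = []
for _ in range(5):
    weights, kept = star_round(weights, dataset, rng)
    history.append(kept)

print(weights)
```

In this toy, only rationales that lead to a correct answer are fed back, so the weight on the wrong strategy never grows while the weight on the right one can. The real method applies the same filtering idea to written chains of reasoning from a language model, with details this sketch leaves out.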

The Importance of Reasoning:

Researchers agree that improving reasoning in AI models is crucial for achieving human or higher-than-human levels of intelligence. In AI terms, this would be the much-mythologized AGI, or Artificial General Intelligence. Reasoning is expected to enable AI to plan ahead, solve complex problems, and make scientific discoveries beyond the mind-boggling array it is already making. OpenAI CEO Sam Altman had earlier emphasized that progress in AI reasoning ability is one of the most important areas of development not just for his company, but for AI in general.

Scepticism About Project Strawberry:

Almost every week, especially if you follow mobile developments, your news feed will be stuffed with one ‘leak’ or another about a new product launch. These are not actual exposés but what is known in public relations as ‘Strategic Press Leaks’ or plain ‘Leaks.’ They are well-coordinated, well-timed public relations exercises run by the company itself. It’s a win-win-win: media outlets get more clicks, companies draw more attention to their products than a boring press release would, and consumer interest is piqued.

One cannot rule out that the ‘Leak’ of project Strawberry from a ‘source’ to Reuters could be one such strategic leak. As it stands, OpenAI has time and again proven itself to be a master of PR strategy and marketing. They stole a march on Google, Meta, and others first with DALL-E, six months later with ChatGPT, and this year with Sora. Six months after its announcement, Sora has still not been released to the public, even though companies like Luma with its Dream Machine, Kuaishou Technology with Kling, and recently Runway with Gen-3 Alpha have launched stunning text-to-video products that anyone can use, either for free or for a fee.

The ‘leak’ of Strawberry could be one such PR activity for OpenAI. Every such ‘leak’ boosts investor confidence in the company and drives up its valuation. The fact is that every GenAI company worth its name is working on some form of ‘reasoning’ ability for its systems.

Yet, the mere fact that OpenAI is calling attention to this aspect is good for the world and AI. As the market leader, they are, for at least the fourth time in two years, driving the conversation and the direction of the generative AI market. Text-to-image, i.e. DALL-E, was first. Text-to-paragraphs (wink, wink for ChatGPT) came second. Text-to-video was Sora. And now the fourth is text-to-reasoning. Holy trinity? No. OpenAI is aiming for a Holy Quadrinity.

Future Prospects:

OpenAI hopes that Strawberry will dramatically enhance its AI models’ reasoning capabilities. Creating, training, and evaluating models on a “deep-research” dataset is meant to allow AI systems to conduct research autonomously. This capability could revolutionize how AI models are used, from scientific research to software development. Basically, a stronger reasoning ability will bring AI closer to behaving like a human. Any task we can do in the digital domain, the AI system will be able to do for us. That would bring us closer to the perfect personal assistant, which in turn would make the use of AI shoot up exponentially.

Just as touchscreens made smartphones ubiquitous, enhanced reasoning will explode the use of AI in our world beyond anything we can imagine right now.

Nothing is more pleasurable than giving AI doomsayers fodder to hyperventilate over. But between their deep pessimism and the super-optimism of Silicon Valley tech-bros lies a ridge where the mythical ‘AI reasoning’ rests. Doomsday is a scenario we are bent on reaching, with or without AI. Before that, there are a million other problems we need to solve, and fast. And for that, we can only hope that projects like Strawberry manage to create AI reasoning within a reasonable time.

In case you missed:

- Rethinking AI Research: Shifting Focus Towards Human-Level Intelligence

- Why is OpenAI Getting into Chip Production? The Inside Scoop

- A Manhattan Project for AI? Here’s Why That’s Missing the Point

- Copy Of A Copy: Content Generated By AI, Threat To AI Itself

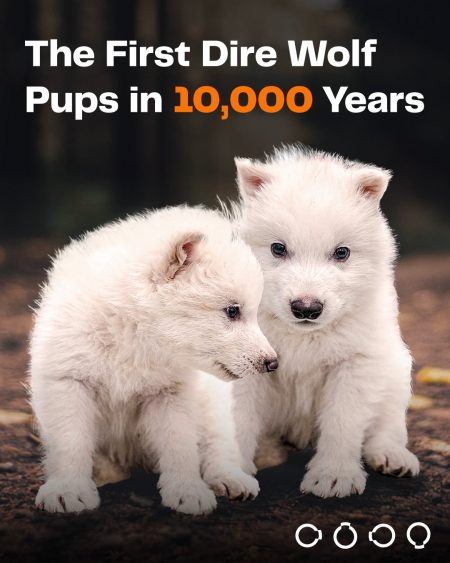

- Bots to Robots: Google’s Quest to Give AI a Body (and Maybe a Sense of Humour)

- Project Stargate: Dubious Origins in the 1970s to AI Goldrush in 2025

- Are Hallucinations Good For AI? Maybe Not, But They’re Great For Humans

- The Rise of Personal AI Assistants: Jarvis to ‘Agent Smith’

- Apple Intelligence – Steve Jobs’ Company Finally Bites the AI Apple

- Rogue AI on the Loose: Can Auditing Uncover Hidden Agendas on Time?