Once thought of as science fiction, artificial intelligence (AI) is now a reality that is becoming more embedded in our everyday lives.

Its applications are wide-ranging, from social media to banking and beyond. This spread has also created confusion around the related terms, especially AI, neural networks, machine learning, and deep learning, which are often used interchangeably.

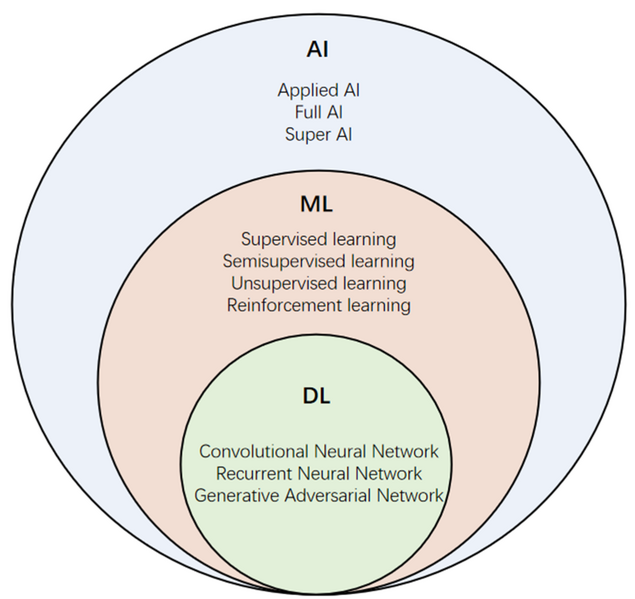

Perhaps the best way to understand their differences is the Russian nesting doll analogy: each concept is a subset of the one before it. That is to say, they form a series ranging from the broadest to the narrowest, with each larger concept encompassing the smaller one. This article unpacks that hierarchy, detailing the differences between these technologies.

All about AI

Artificial Intelligence focuses on creating “intelligent” machines that can perform tasks that usually require human intelligence. These include decision-making, perception, problem-solving, and learning. The concept is not exactly new; it dates back to the 1950s. Over the decades, the technology has evolved significantly, thanks to advancements in data availability, algorithms, and computing power. So, what are the main approaches to artificial intelligence?

- Symbolic AI: This is rule-based or classical AI that employs logic and a set of rules to make decisions and represent knowledge. It operates on predefined patterns and symbols and is especially suited for expert systems and rule-based reasoning. However, it struggles with tasks requiring learning from data or involving uncertainty.

- Machine Learning (ML): This subset of AI focuses on developing models and algorithms that learn from data and improve over time without being explicitly programmed for each task. A common approach is to train models on labelled datasets so they can make predictions by recognizing patterns. ML paradigms include supervised, unsupervised, and reinforcement learning, among others.

- Deep Learning (DL): This subset of ML employs multi-layered neural networks to learn complex hierarchical representations from data. These networks are central to DL, as they process and extract features from raw data to perform tasks like natural language processing, speech recognition, and image recognition. DL excels in tasks that involve large amounts of data and require high levels of abstraction and accuracy.
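To make the contrast between the first two approaches concrete, here is a minimal sketch in Python. The spam scenario, the data, and the function names (`symbolic_rule`, `learn_threshold`) are all hypothetical and chosen only for illustration. The first function hard-codes a rule, as symbolic AI would; the second picks its own cut-off from labelled examples, which is machine learning in miniature.

```python
def symbolic_rule(exclamations):
    """Symbolic AI: a hand-written rule fixed by the programmer."""
    return exclamations >= 3


def learn_threshold(examples):
    """Toy machine learning: choose the cut-off that makes the
    fewest mistakes on the labelled examples."""
    candidates = sorted({x for x, _ in examples})

    def errors(threshold):
        # Count examples where the rule "x >= threshold" disagrees with the label.
        return sum((x >= threshold) != label for x, label in examples)

    return min(candidates, key=errors)


# Hypothetical labelled data: (exclamation marks in a message, is it spam?)
training_data = [(0, False), (1, False), (2, False),
                 (4, True), (5, True), (7, True)]

threshold = learn_threshold(training_data)


def learned_rule(exclamations):
    """The rule the program derived from data rather than from a programmer."""
    return exclamations >= threshold


print("learned threshold:", threshold)
print("is a message with 6 exclamation marks spam?", learned_rule(6))
```

If the labelled data changes, `learn_threshold` returns a different cut-off with no change to the code, whereas `symbolic_rule` stays the same until someone rewrites it.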

All about Neural Networks

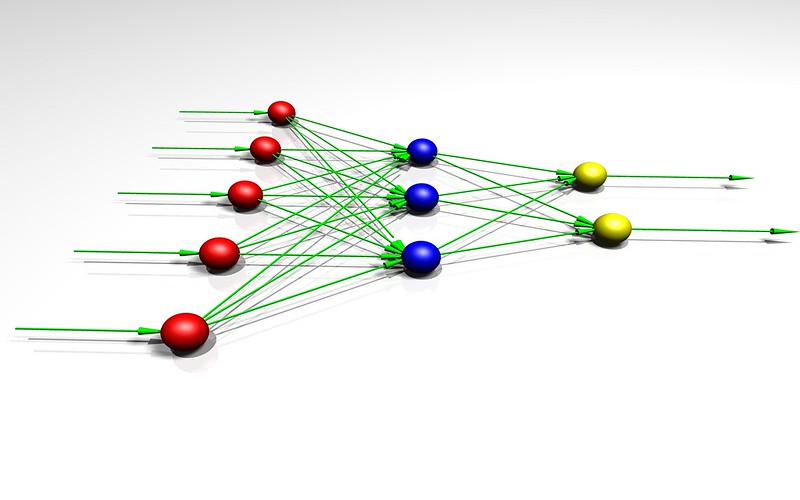

Neural networks are a specific kind of algorithm that draws inspiration from the structure and function of the human brain. They comprise interconnected neurons, or nodes, arranged in layers, with the nodes in one layer typically linked to those in the next via weighted connections. Neural networks perform computations by passing input data through these interconnected nodes, much as the brain processes information via signals transmitted between neurons. They also learn and adapt, a process that involves adjusting and fine-tuning the weights of these connections based on the data supplied during training. Here is an overview of the kinds of neural networks:

- Recurrent Neural Networks (RNNs): These are suited for processing sequential data, such as time-series predictions and natural language processing tasks.

- Convolutional Neural Networks (CNNs): These are usually employed for computer vision and image recognition tasks, as they are effective at detecting features and patterns in visual data.

Other kinds include Generative Adversarial Networks (GANs) and Long Short-Term Memory (LSTM) networks, which are a type of RNN, each with its own architecture and capabilities.
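The idea of layers of nodes joined by weighted connections can be sketched in a few lines of Python. This is a plain feedforward network, not an RNN, CNN, or GAN, and the weights, biases, and layer sizes below are made-up values for illustration rather than anything trained.

```python
import math


def sigmoid(x):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_forward(inputs, weights, biases):
    """Each node sums its weighted inputs, adds a bias,
    and passes the result through an activation function."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hypothetical weights: 2 inputs -> 2 hidden nodes -> 1 output node
hidden_weights = [[0.5, -0.4], [0.3, 0.8]]
hidden_biases = [0.0, -0.1]
output_weights = [[1.2, -0.7]]
output_biases = [0.05]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)
    return layer_forward(hidden, output_weights, output_biases)


prediction = forward([1.0, 0.0])
print(prediction)
```

Specialized architectures such as CNNs and RNNs change how the nodes are wired together, but the basic step of weighting, summing, and activating stays the same.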

Major Differences between AI and Neural Networks

As we mentioned earlier, AI aims to simulate human intelligence and cognitive skills in machines through a wide range of methodologies and technologies. Neural networks, on the other hand, are one specific kind of AI technique: models built from artificial nodes (neurons) that learn and adapt through training. Although they play a crucial role in AI application development, neural networks are not the only technique available; others include reinforcement learning, genetic algorithms, and expert systems.

Perhaps the most critical benefit of neural networks is that they can readily adapt to changing patterns in data. Rather than being manually reconfigured for each new kind of input, a network adjusts its own weights through supervised or unsupervised learning.
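As a rough illustration of that self-adjustment, the sketch below trains a single artificial neuron with the classic perceptron update rule, a deliberately simple form of supervised learning. The OR-gate data, the learning rate, and the function names are assumptions made for this example; real networks use many neurons and more sophisticated training methods.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=0.1):
    """Nudge the weights after every wrong prediction (perceptron rule)."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            # When error is 0 the weights stay put; otherwise they shift
            # toward the value that would have produced the right answer.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical training data: the logical OR of two inputs
or_gate = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(or_gate)
for inputs, target in or_gate:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

Nobody sets the weights by hand here; they start at zero and are shaped entirely by the examples the neuron is shown.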

Choosing between Neural Networks and AI: The Future

To sum it up, AI is the overarching structure, with ML as a subset of AI and DL as a subset of ML. Neural networks are the backbone of DL algorithms, and depth is what separates a basic neural network from a deep learning one: by a common rule of thumb, a network needs more than three layers of nodes to count as deep learning.

In the end, the differences between the two lie in their functionality and scope. AI is the broader concept, while a neural network is one technique within it, using an interconnected structure of nodes to process data. When comparing the two, it is important to consider the complexity of the task involved. The broader AI toolkit is better suited for tasks requiring adaptive learning and general intelligence, while neural networks are best for work that involves predictive analytics, speech and image processing, and pattern recognition.
