As Mark Zuckerberg promises to make Threads a ‘friendly place’ run on ‘kindness’ in a reversal of social media wisdom, Satyen K. Bordoloi wonders whether social media AI can indeed be programmed on ideals of goodness and, if it can, whether its owners will accept it.

The Wall Street Journal recently ran a story on Kenyan workers who weeded out and labelled violent, false and misleading content in ChatGPT’s training data. The work traumatised them so badly that their lives fell apart, even though it made ChatGPT work better. It also reveals the dark side of AI: it is easier, and, as we shall see, more profitable, to let AI do the opposite, i.e. learn from and promote the violence it encounters.

(Image Credit: Wikipedia)

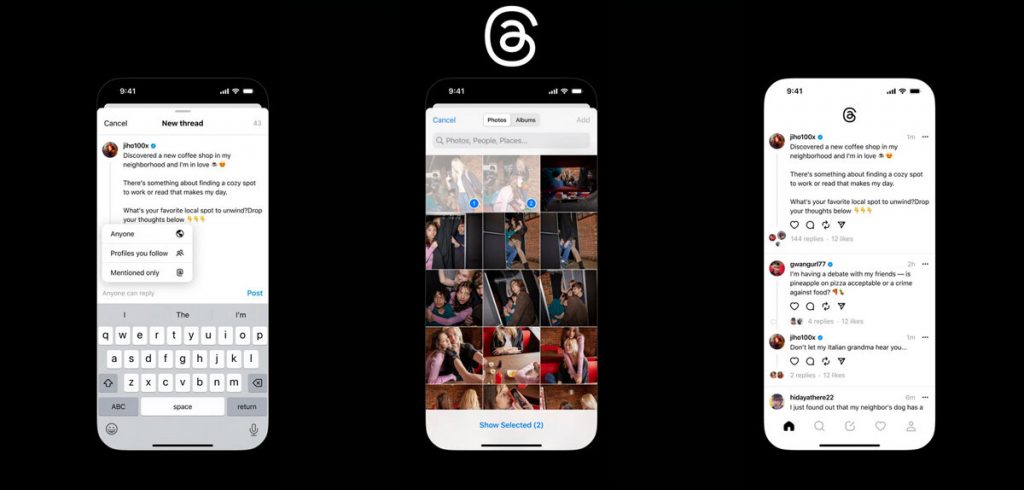

When Mark Zuckerberg launched Threads, it broke the record held by ChatGPT, reaching 100 million users in five days. Pitching Threads as a rival to Twitter, which has largely become a cesspool of violent, extremist, nasty and conspiratorial content, starting with its owner Elon Musk, Zuckerberg said Threads would focus on ‘kindness’ and be a ‘friendly place’. The promise drew laughter, scorn, ridicule, condemnation, accusations of hypocrisy, and memes.

That’s because every social media platform so far has grown by promoting the opposite, not passively but actively: the AI algorithms responsible for recommendations enthusiastically promote bad content simply because it drives engagement. In such an atmosphere, can AI be taught to do the reverse? And even if it can, going by his own track record, will people like Mark Zuckerberg accept it?

THE FAULT IN AI STARS

Just as the calendar has two eras, BC and AD, i.e. Before Christ and Anno Domini (‘in the year of the Lord’), every Web 2.0 platform, be it a social network or a content-sharing platform, has a BA and an AE: Before AI and After Engagement. AI exploded into our world around 2012, and over the next few years every social media and content platform realised what an important tool it had in its arsenal.

In the Before AI era, i.e. pre-2012, Facebook, YouTube, Twitter and their ilk showed you content from your friends, the channels you subscribed to or the accounts you followed, making them vital tools for building alliances and solidarity. But in the After Engagement era, i.e. post-2012, AI began showing you content you hadn’t subscribed to but that was similar to what you had already consumed. Thus began the era of engagement, culminating in the influencer economy and engagement-driven businesses at the expense of originality and quality.

These Web 2.0 leaders quickly figured out which kind of content made people stay: content that enraged, pitted people against each other, drove polarisation, and invited right-or-wrong, for-or-against stands. Kindness and friendliness were abandoned because they made for quick, short, unengaging exchanges, while angry content drives passion, forcing you to react, comment, share, like, hate, and so on. If someone was nasty to you, you stayed up all night fighting as comments flew back and forth like bullets. You lost sleep, your blood pressure and sugar levels shot up, but you were ‘engaged’. You stayed connected and lingered on the platform far longer than you would have if the content or commenters had been ‘kind’ or ‘friendly’.

These platforms figured out that what works for them is the same thing that works in cinema, series, documentaries and short films: conflict. The only difference is that films and series mostly resolve or close that conflict by the end. On social media there is no resolution; you jump from one conflict to the next endlessly, with nothing worthwhile ever coming of it.

THE MURDER AND MAYHEM UNLEASHED BY SOCIAL MEDIA

In November 2017, 14-year-old Molly Russell ended her life. Seeking answers, her parents went through her social media accounts and were stunned to find that she had had unfettered access to self-harm content on Instagram and other platforms. Molly’s case is well known because her parents raised a stink. There are likely thousands, if not tens of thousands, of such cases across the world on every social media platform that never get reported. And the worst culprit among them is the man now touting ‘kindness’ and ‘friendliness’: Mark Zuckerberg, with Facebook.

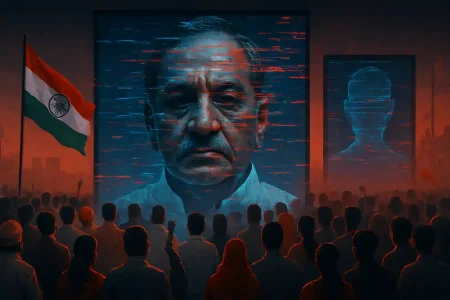

In 2017, for people in Myanmar, the internet meant Facebook. Almost everything online in the country happened through Facebook, including, ironically, the genocide of the Rohingyas. An estimated 24,000 Rohingyas lost their lives, in large part due to lies and falsehoods propagated on Facebook that the company refused to take down for fear of hurting growth.

The story of lies, violence, misinformation, propaganda and conspiracy theories spread through social media spans the globe: from Brexit in Britain to the 2016 US presidential election, from elections in South America to the state-run troll farms in Russia, India and many African and Asian countries that spread government propaganda and, often, misinformation.

What is common to all of these, be it Facebook’s role in the violence in Myanmar, the Cambridge Analytica scandal around Brexit and the US presidential election, or the deaths of people like Molly Russell, is artificial intelligence. The content was mostly curated by AI under the watchful eyes of humans. Hateful and violent content drives engagement on these platforms, and their owners, particularly Mr Zuckerberg, have not only done nothing about it but have actively tweaked their own AI algorithms to encourage it.

The question, then, is this: in an age of social ruin and destruction unleashed by most Web 2.0 corporations’ misuse of AI, can the AI of Web 3.0 be trained to do the opposite?

THE WAY WAY BACK FOR AI AND SM

The first and foremost thing to remember (AI doomsayers be doomed) is that AI is not a living entity but the smartest type of program humans have created to assist us in our work. As the example of the Kenyan workers keeping violent content out of ChatGPT shows, AI can be programmed to do almost anything, including making social media like Threads friendly and kind. It is just a matter of coding, and that is the easy part. All it would take is a minuscule fraction of Meta’s huge coffers, some smart, nerdy Silicon Valley brains and cheap English-speaking labour from places like Africa and India to pull it off.

The difficult bit is that kindness is not engaging. When two people are kind to each other, the conversation and the back-and-forth of comments end quickly. But two people in conflict will go at each other hammer and tongs, forgetting day and night, as this writer himself did in the distant past. And let’s not forget that on social media most of us love to be trigger-happy. This includes everyone, from the conspiracy-laden right-wing ecosystem to the so-called liberal, left-wing groups. Everyone in the world is insecure, and almost everyone finds validation on social media by proving that the other person, whoever they may be, is an idiot of the highest ‘calibre’ for believing what they do.

Remember the trench warfare of World War I, where enemy soldiers took shots at each other from trenches dug barely a few hundred feet apart, for four years? That’s the perfect analogy for social media, except that here the trenches are deeper and no one dies from direct wounds. And while World War I lasted four years, World War Social Media is endless.

Yet Meta can actually keep its promise. Reaching 100 million users in five days means Threads has already hit roughly one-third of Twitter’s user base. If anyone doesn’t need bigger numbers and false engagement to grow, it is Threads and its parent company Meta. They can truly lay down the rules for a new kind of platform, one where AI algorithms promote kindness and niceness. But will the man who, while building Facebook, called the people who handed their data to him ‘dumb f***s’ because they “trust me” actually make Threads kind and friendly?

Maybe. Maybe not. Yet one truth cannot be denied: these corporations and their profit-at-all-costs masters with bloated egos don’t create social fault lines. They merely exacerbate them and mine them for profit. Hence it is also up to all of us to change our behaviour and show that kindness and friendliness on social media can indeed be engaging, and thus profitable. But will we be up to the task? Only time, the time we spend on Threads, will tell.
