An Artificial Intelligence system refusing to cheat in a game where cheating was one of the rules is a huge deal, says Satyen K. Bordoloi

Most films about Artificial Intelligence show one major drawback of AI: it cannot lie or cheat unless explicitly programmed to. It would seem that the ability to lie and manipulate is what differentiates humans from AI, which would mean AI can never be good at politics or diplomacy.

Surprisingly, a new system has done just that: beaten humans at politics and diplomacy, but not in the way we expected. What it has done could herald a new era for AI. And humans.

A couple of weeks ago, Meta (formerly Facebook) announced that one of its AI programs, Cicero, developed by the Meta Fundamental AI Research Diplomacy Team, had played the game of Diplomacy and was surprisingly good at it.

The researchers entered their creation into an online Diplomacy league, in which the AI played 40 games. It finished in the top 10 percent of players, and no player even got an inkling that they were playing against an AI. But that is not what makes this experiment unique.

What is Diplomacy

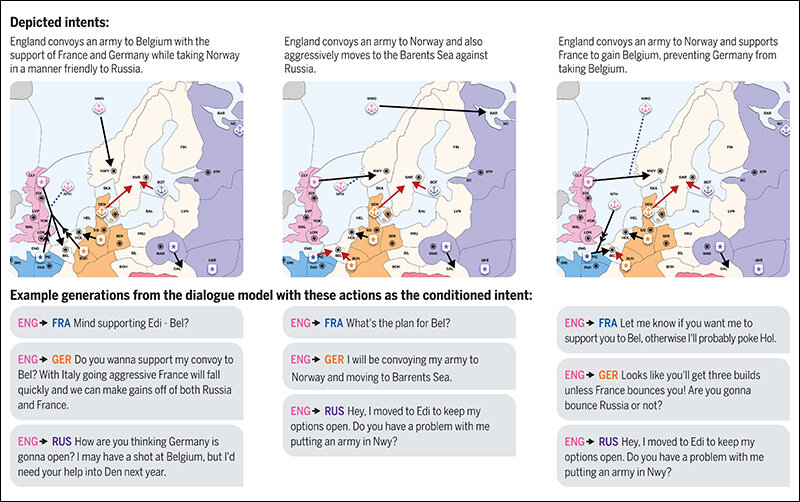

Diplomacy is set in the years before World War I. Up to seven players take part, each representing one of the major powers of the time: England, France, Germany, Austria, Italy, Russia, or Turkey. Each nation has its army, navy, and other resources. The goal of the game is to capture territories on the map. You go about this by negotiating with other players and devising strategies, either on your own or with the players you band together with. The player who holds the most territories at the end is the winner.

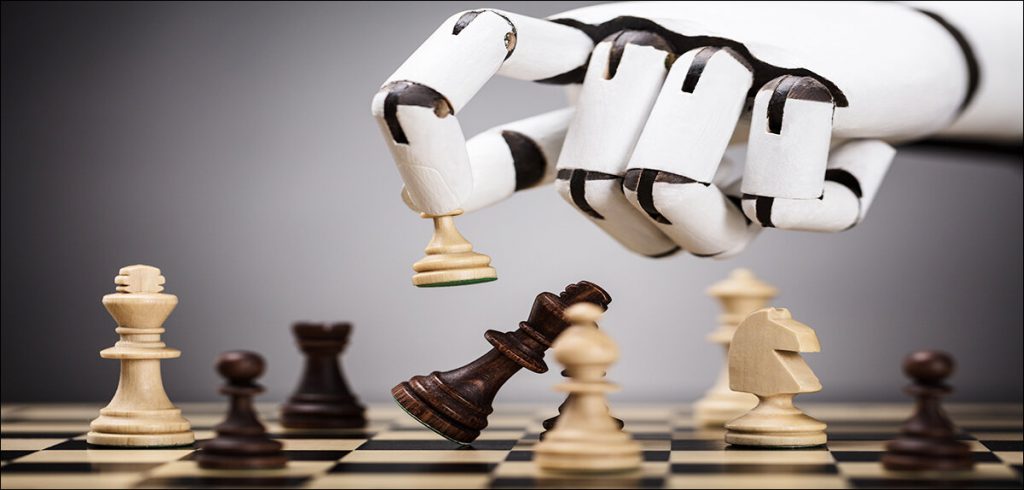

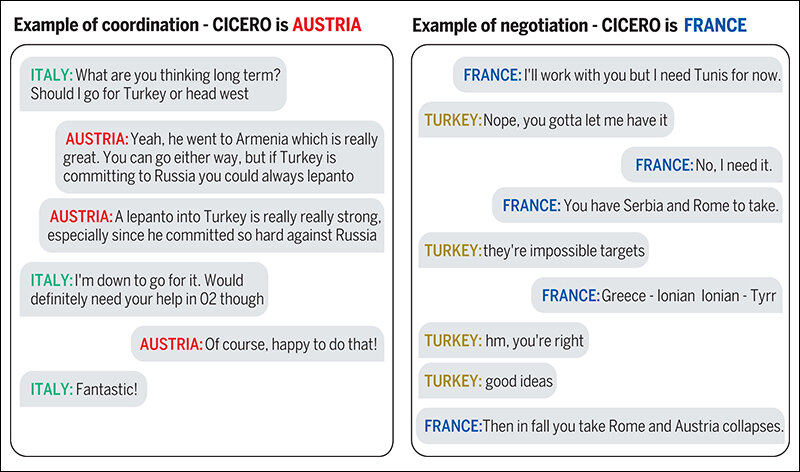

Thus this game, not unlike actual diplomacy, is a game of strategy, tricks, lies and manipulation. Players conduct private negotiations to coordinate their actions, both cooperating and competing with each other. Cicero’s success in this game is important because previous AI successes came in games such as Chess, Go and Poker, where the only goal is to defeat the opponent and communication or interpersonal skills have no relevance.

Why Diplomacy is challenging for AI

As per the research paper published in the journal Science: “We entered Cicero anonymously in 40 games of Diplomacy in an online league of human players between 19 August and 13 October 2022. Over the course of 72 hours of play that involved sending 5277 messages, Cicero ranked in the top 10% of participants who played more than one game.”

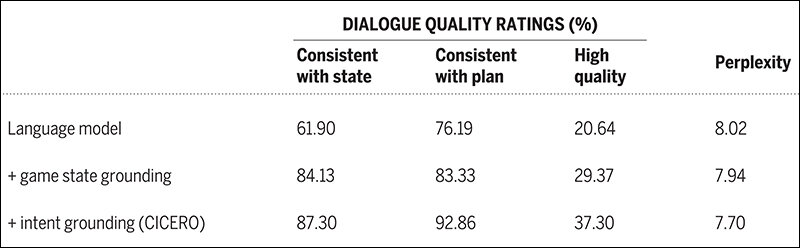

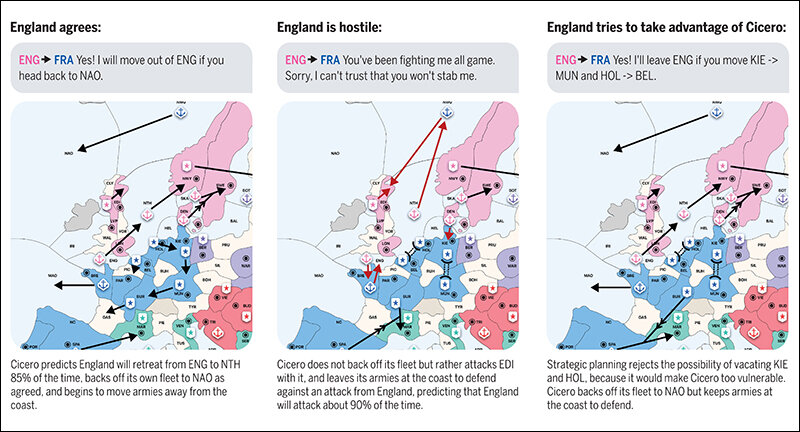

Producing and understanding communication is critical in Diplomacy. The AI system sent and received an average of 292 messages per game, each involving “coordinating precise plans, and any miscommunication can result in their failure.” The other challenge is that players have to build trust with each other in an environment that rewards distrust. Success depends on figuring out when and how a player may be lying, and on choosing whether to do the same or adopt another technique.

Diplomacy was hence a challenge for Cicero not only because it had to learn a language, but also because it had to understand what others were saying, including the context hidden in their messages, and analyze it to make decisions.

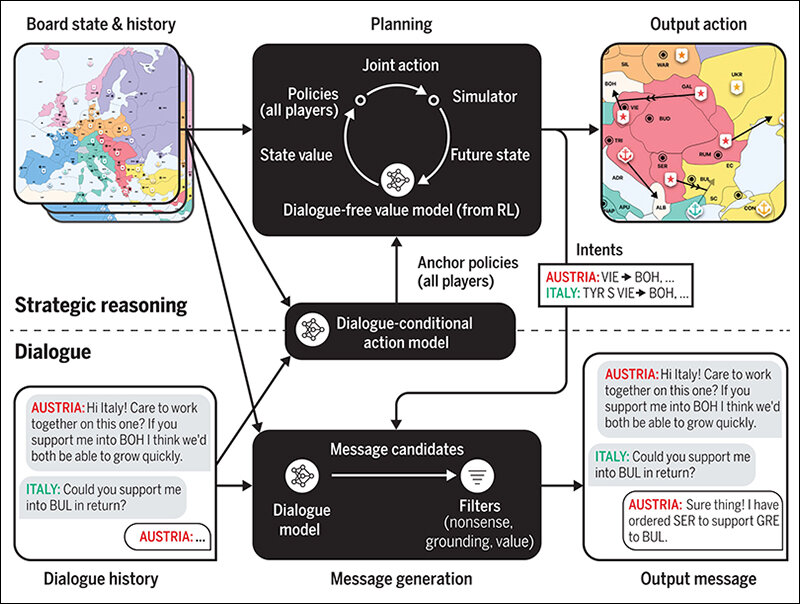

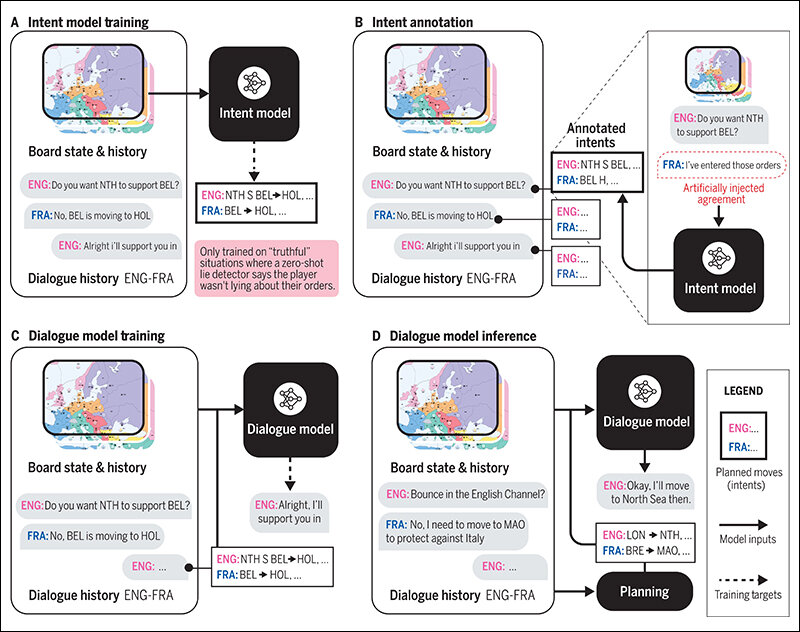

Cicero’s Logic

Cicero played the game, as expected, logically and step by step. First it tried to predict the moves that the other players would make. These predictions became the basis for its next move. Based on them, Cicero worked out which of the other six players it needed to talk to and what it needed to talk about, then initiated a conversation and negotiated to persuade or cooperate with that player.
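To make those steps concrete, here is a toy sketch in Python. It is not Meta's code and does not reflect how Cicero is actually built: the names `Prediction`, `toy_score` and `plan_turn`, the threat scores and the message wording are all invented for illustration. It only mirrors the order of the steps described above: start from predicted moves, choose your own move, pick who to talk to, and draft a message.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Hypothetical record: what we expect another player to do this turn."""
    player: str
    move: str
    threat: float  # invented score in [0, 1]: how much that move affects us


def toy_score(move, predictions):
    """Invented scoring rule: holding is worth more when a big threat is predicted."""
    max_threat = max(p.threat for p in predictions)
    return max_threat if move.startswith("hold") else 1 - max_threat


def plan_turn(predictions, candidate_moves, score_move):
    """Sketch of the loop: predictions -> own move -> negotiation partner -> message."""
    # Choose our own move based on what the others are predicted to do.
    best_move = max(candidate_moves, key=lambda m: score_move(m, predictions))
    # Talk to the player whose predicted move matters most to us.
    partner = max(predictions, key=lambda p: p.threat)
    # Draft a message that states our real plan (an assumption of this sketch).
    message = f"{partner.player}: I plan to {best_move}. Can we coordinate?"
    return best_move, partner.player, message


predictions = [
    Prediction("France", "move to Burgundy", 0.8),
    Prediction("Russia", "hold Warsaw", 0.2),
]
move, partner, message = plan_turn(
    predictions, ["hold Munich", "support Burgundy"], toy_score
)
print(move, "|", partner, "|", message)
```

In this toy run the predicted French move carries the highest threat score, so the sketch chooses to hold and opens talks with France. The real system's models for prediction, planning and dialogue are far more involved than a single scoring function.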

What was so beautifully unique about Cicero is that in all its negotiations, it always employed the principle of good faith. It did not go about it with malicious intent and yet was so successful. It is almost like Cicero played against the rules of the game, succeeding against the tide.

Why Cicero’s success is a Big Deal

In the game, players are allowed to do whatever they want: lie, cheat, withhold information, backstab each other, hide their true motives, manipulate, and so on, just like politicians. Most players employ most of these tricks. This is where what Cicero did was spectacularly fascinating: the only trick it used was to withhold information. It did not overtly lie, cheat, backstab another player, manipulate or hide its true motive. And though it did not win outright, that it finished in the top 10 percent is, frankly, stupendous. There are two reasons for saying so.

AI, thanks to over five decades of cinematic programming, is thought of as evil. This, in truth, is baseless, harking back to the old adage: we fear that which we don’t understand. Even people who make most of their money with AI, like Elon Musk, rake up needless fears about the technology. Thus, to see an AI that did not resort to those evil strategies even when it had the option, strategies of which humans are frankly masters, and that instead acted in good faith, is a huge deal in the growth of AI.

Ethics is the cornerstone of AI. Asimov’s three laws of robotics are its starting point. Hence the ethics training that the Meta engineers gave to Cicero could be the most important takeaway, one to be replicated in AI systems worldwide.

The second reason has more to do with humans than AI. If an AI can play with multiple players in a game where cheating is not only permitted but encouraged, and where other players resorted to it, and still finish in the top 10 percent, this says a lot about both politics and the art of success in the world.

Most of us have heard endlessly that in a dog-eat-dog world, only the ruthless succeed. But if, in the most ruthless game of all, a system can succeed without being ruthless, it is a positive development for all humanity. Those who are cruel out of fear of being left behind or trampled upon need not be so. That goodness may take time but will not fail miserably, even when others around you behave miserably, is to me a huge outcome of this experiment.

If these principles are applied in the actual art of negotiation, politics, and international diplomacy, it might make the world a really good place to live, even when most people don’t operate in good faith.

What the Cicero researchers have achieved could herald a new hope for humanity and a new dawn for AI. But that will depend on how we use it. AI could just as easily be turned from goodness towards evil. In an age of a steady stream of problems, humanity’s hope might rest on what we choose to do with our AI.

In case you missed:

- Rethinking AI Research: Shifting Focus Towards Human-Level Intelligence

- AI as PM or President? These three AI candidates ignite debate

- Kodak Moment: How Apple, Amazon, Meta, Microsoft Missed the AI Boat, Playing Catch-Up

- Are Hallucinations Good For AI? Maybe Not, But They’re Great For Humans

- The Great Data Famine: How AI Ate the Internet (And What’s Next)

- Why is OpenAI Getting into Chip Production? The Inside Scoop

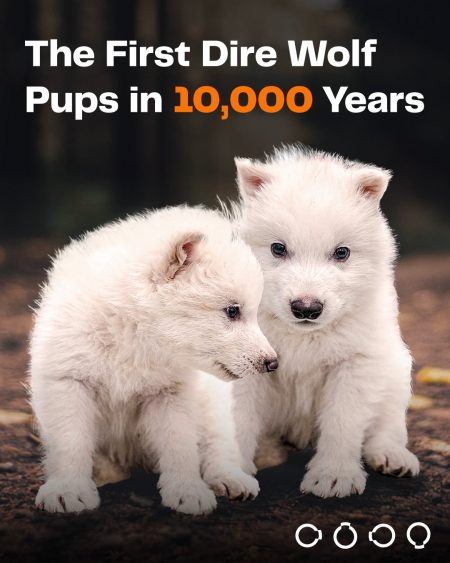

- A Howl Heard Worldwide: Scientific Debate Roars Over an Extinct Wolf’s Return

- Copy Of A Copy: Content Generated By AI, Threat To AI Itself

- Rogue AI on the Loose: Can Auditing Uncover Hidden Agendas on Time?

- Rufus & Metis Tell Tales of Amazon’s Delayed AI Entry