For AI to reach its full potential as a powerful helper in all our tasks, the system that needs to outshine others is one few know about – LiDAR, writes Satyen K. Bordoloi.

The first pedestrian killed by a self-driving car, and arguably the first human directly killed by AI, was Elaine Herzberg. On 18 March 2018, she was walking her bicycle across a four-lane road when an autonomous vehicle (AV), i.e., one driven by AI, hit her. An AV has a complex system of cameras and sensors making sense of what’s around it. In this case, the main failure lay in how the vehicle’s system interpreted what its sensors, LiDAR included, had picked up.

AI cannot think. Or see. Or understand. What we consider ‘thinking’ is mere mimicry. Thousands of human workers train AI over months and years to do what it finally does, expecting that once released, it’ll learn and evolve on its own, an expectation that’s proving incorrect. One of the biggest problems in training AI systems, mainly those in AVs, is how to make onboard computers see. You may think it’s easy. But this field, called computer vision, is treacherously tricky.

WHAT IS COMPUTER VISION:

This is basically everything you do to give a digital system the ability to see and interpret the world. ChatGPT, Bard and other generative AI systems are blind. They do not need to see what’s around them, just generate words based upon their dataset, training and query. For Bard or ChatGPT to tell you what the photo behind your chair shows, you’d need to take a snapshot of it and upload it into their systems; the AI would then run a search and tell you. Yet, it can’t tell you how far that photo is from your chair. Or if the frame is made of wood or metal.

This is fine for Bard or ChatGPT. But if you need to build a sophisticated AI system like an AV or, say, one for blind people to use, depth becomes key. Imagine a blind girl wearing smart glasses. Their multiple cameras and sensors not only have to interpret what’s in front of her but also have to tell her how far the car on the left is, at what speed it’s approaching and whether that leaves her enough time to cross the road.

If she could see, she’d have known without thinking that the car is about 50 meters out and, after watching it for a second, she’d have judged its velocity well enough to calculate that she could safely cross. All of us make such decisions every moment of our lives without thinking. It is when we try to make AVs ‘see’ that we realise how complex such small decisions are, and how life-altering they could be.
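The judgement described above can be written out as a small calculation. This is only a sketch: the function names, the 45-meter second reading, the 5-second crossing time and the 2-second safety margin are all assumptions made for illustration, not figures from any real system.

```python
def closing_speed(range_start_m, range_end_m, interval_s):
    """Approach speed in m/s, from two range readings taken interval_s apart."""
    return (range_start_m - range_end_m) / interval_s


def time_to_arrival(range_m, speed_mps):
    """Seconds until the object reaches the observer at constant speed."""
    if speed_mps <= 0:
        # Object is stationary or moving away: it never arrives.
        return float("inf")
    return range_m / speed_mps


def safe_to_cross(range_start_m, range_end_m, interval_s,
                  crossing_time_s, margin_s=2.0):
    """True if the car arrives later than crossing time plus a safety margin.

    margin_s is a hypothetical buffer chosen for this example.
    """
    speed = closing_speed(range_start_m, range_end_m, interval_s)
    return time_to_arrival(range_end_m, speed) >= crossing_time_s + margin_s


# The car is 50 m out; one second later it is (assumed) 45 m out.
speed = closing_speed(50.0, 45.0, 1.0)   # 5.0 m/s
arrival = time_to_arrival(45.0, speed)   # 9.0 s
print(speed, arrival, safe_to_cross(50.0, 45.0, 1.0, crossing_time_s=5.0))
```

A sighted person does all of this at a glance. A machine has to measure both distances explicitly before it can make the same call.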

To do this, the AI system would need not only to ‘see’ but view the surroundings in 3D. This is where LiDAR comes in.

(Video Credit: Wikipedia)

LiDAR – THE EYE OF THE AI:

LiDAR stands for Light Detection And Ranging. It sounds similar to RADAR (Radio Detection And Ranging) because both do similar things: detect the presence and distance of far-off objects. The major difference is the wavelength they use and thus what and how much they capture. RADAR uses radio waves and LiDAR uses light waves. RADAR’s longer wavelength gives it a wider beam divergence, so it covers a broad area and can pick up objects far off, though in less detail, while LiDAR has a narrower, more focussed beam that captures finer detail, typically over a shorter range.

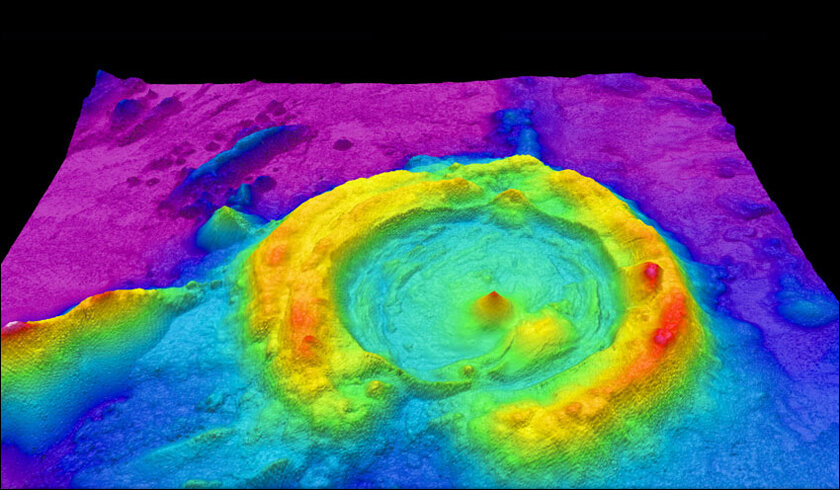

LiDAR is thus more accurate and can provide more detailed 3D mapping, while RADAR is more versatile and can operate in a wider range of conditions.

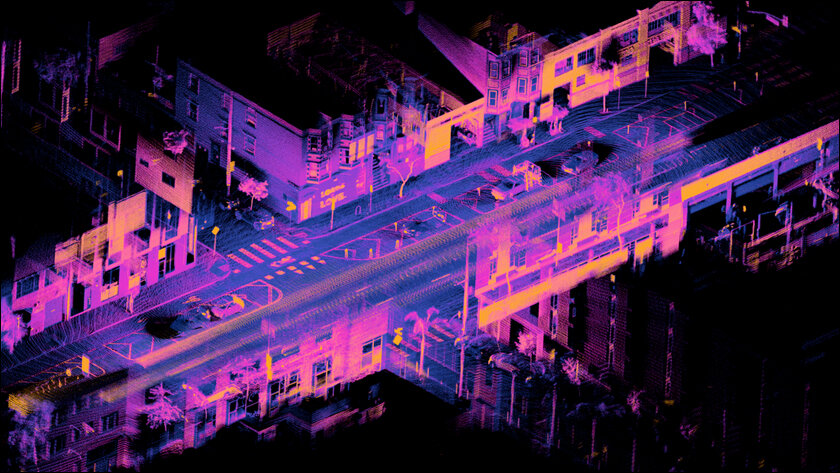

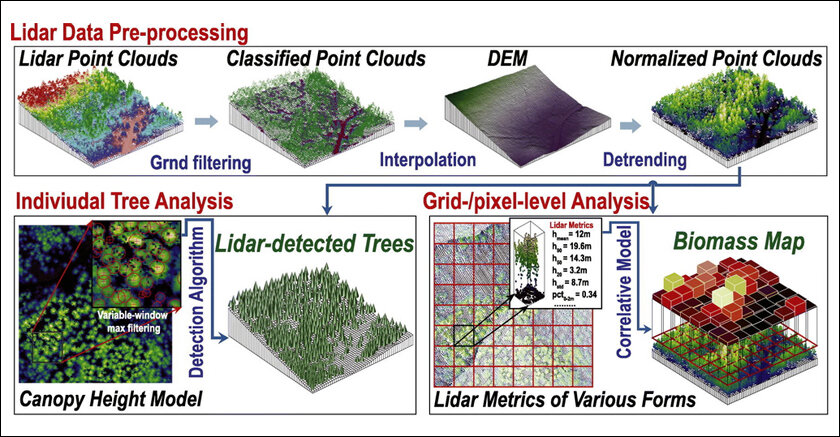

LiDAR works by emitting pulsed light waves into the surrounding area. When a pulse encounters an obstruction, the light bounces off and returns to the sensor, which measures the time each pulse took to return and from that calculates the distance. The system does this millions of times per second to create a real-time 3D map of the environment, which is called a point cloud. In an AV, the onboard computing system uses this LiDAR point cloud to know what is around and thus safely navigate the vehicle.
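The arithmetic behind that description is short enough to sketch. The pulse travels to the object and back, so the distance is the speed of light multiplied by half the round-trip time. The function names and the input format below (round-trip time plus the beam’s horizontal and vertical angles) are assumptions for illustration; real sensors report their data in their own formats.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s):
    """Distance to the object: the pulse covers the path twice, so halve it."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_cartesian(range_m, azimuth_rad, elevation_rad):
    """Convert one return (range plus beam angles) into an (x, y, z) point."""
    horizontal = range_m * math.cos(elevation_rad)
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def build_point_cloud(returns):
    """Turn (round_trip_s, azimuth_rad, elevation_rad) tuples into 3D points."""
    return [
        to_cartesian(range_from_round_trip(t), az, el)
        for t, az, el in returns
    ]


# Three made-up returns: straight ahead, 90 degrees left, and tilted upward.
sample_returns = [
    (1.0e-6, 0.0, 0.0),
    (2.0e-7, math.pi / 2, 0.0),
    (4.0e-7, 0.0, math.pi / 6),
]
for point in build_point_cloud(sample_returns):
    print(tuple(round(c, 2) for c in point))
```

A pulse that returns after one microsecond has hit something roughly 150 meters away, which shows how fine the sensor’s timing must be to resolve distances of a few centimeters.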

LiDAR can detect not only how far an object is but, from the intensity of the reflected light, also help estimate what it’s made of. E.g., metal tends to reflect most of the laser light back, while wood absorbs some of it. This, along with images from a normal camera that the AV runs through its software, helps it predict accurately what that thing is.

In a normal AI navigation system like an AV, there are about 30 sensors, including cameras, that help it navigate through the world. Right at the highest point of the vehicle, throwing light beams into the surroundings, is the LiDAR. Alongside LiDAR and RADAR, the vehicle has multiple cameras, sonar, inertial measurement units, GPS, odometers, wheel encoders etc.

In a moving self-driving car, the data from these multiple sensors and cameras are taken on the go and analysed in real time. The faster the car, the less time its onboard computers have to make calculations. Ideally, because of the risk involved, it should have more time for greater accuracy. Sadly it’s the reverse.
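A rough calculation shows how quickly that time shrinks. The 100-meter detection range and the 0.1-second processing delay below are assumed values picked for illustration, not measurements from any real vehicle.

```python
def speed_mps(speed_kmh):
    """Convert km/h to m/s."""
    return speed_kmh * 1000.0 / 3600.0


def distance_during_latency(speed_kmh, latency_s):
    """Meters the car travels while the computer is still processing."""
    return speed_mps(speed_kmh) * latency_s


def time_budget(detection_range_m, speed_kmh):
    """Seconds between first detecting an object and reaching it."""
    return detection_range_m / speed_mps(speed_kmh)


DETECTION_RANGE_M = 100.0  # assumed sensing range
LATENCY_S = 0.1            # assumed processing delay per decision

for kmh in (36.0, 108.0):
    print(
        kmh,
        round(distance_during_latency(kmh, LATENCY_S), 2),
        round(time_budget(DETECTION_RANGE_M, kmh), 2),
    )
```

Under these assumptions, tripling the speed from 36 km/h to 108 km/h cuts the time available from 10 seconds to about 3.3, while the car covers 3 meters instead of 1 during every processing delay.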

The smart glasses a blind person wears would need to do what an AV does, i.e. ‘see’ the surroundings using these sensors, including in 3D. Using generative AI systems like Bard or ChatGPT, they can then describe the scene to the wearer, tell them what is around or warn of dangers. Thus, at a party, a blind person could be a better charmer than a sighted one, as their AI system not only tells them the name of each person but also describes what they are wearing, helping the wearer joke about it or draw attention to it.

WHY LIDAR IS THE FUTURE:

The world, either too satisfied or alarmed by the likes of ChatGPT, does not realise the future scope of AI. AI is already being used in most industries, businesses and work. Yet, its most profound use will be in our personal lives as our personal assistants in our devices that help us do most jobs, making us more productive.

We’d want AI systems to help us in the real world. But an AI system cannot understand the real world unless it has a sound LiDAR system on it and the corresponding Machine Learning tools backing it up. Every single device we use AI on would need LiDAR. Think about it: what good would a personal assistant on your mobile be if, after you point it over your shoulder to see if your girlfriend is following you, it can’t even tell from the speed of her steps and her expression that she’s angry?

LiDAR, besides the camera, is the critical tool in AI’s arsenal for its full actualization and for it to live up to its promise to humans.

The current AI and ML tools on our phones do a lot. They can translate a signboard. Turn speech into written words. Identify a tree if you point the camera at it. And with generative AI tools like ChatGPT and Bard installed, they can literally speak these things into our ears through a Bluetooth headset. But they can’t tell how far away your car is parked when you stagger out of the pub after a heavy drinking session. Or prevent you from driving it after analysing how long you took to walk the 10 meters to your car, and the zig-zag path you took. If you had LiDAR on your phone, as some iPhone and iPad models do, it could do those calculations, perhaps saving your life in the process as it’d book you an Uber instead.

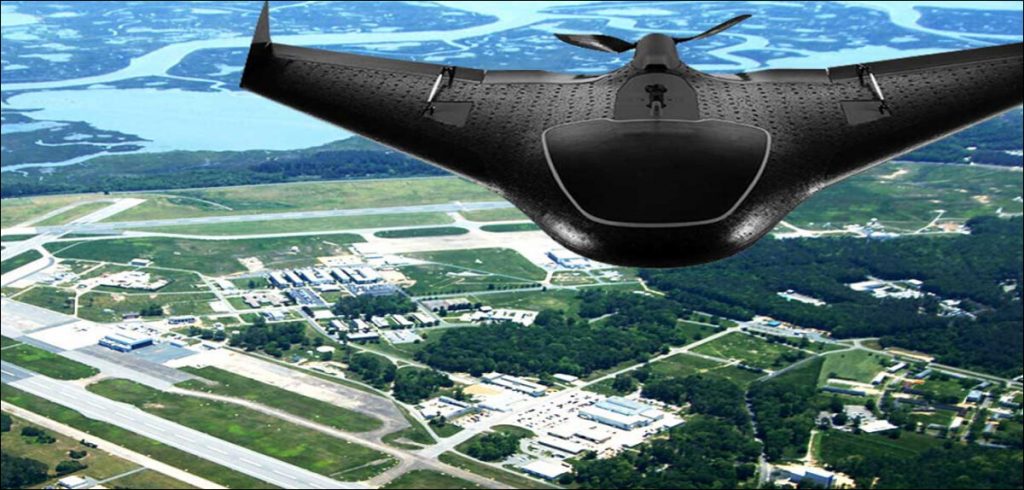

LiDAR can be useful in a variety of fields other than in personal assistance. Besides self-driving cars, it is a key tool in mapping the environment to create detailed maps. On a drone, it can quickly make a 3D map of a place you’re interested in – proving to be a vital tool in everything: from archaeology to disaster response, policing and security to a battlefield.

Like inside AVs, LiDARs are indispensable in robots. The robot servant in every household – the staple of science fiction – won’t come true without LiDAR systems. They are also vital in any training simulation. 3D models created with the help of LiDAR can be key for design and manufacture, even in remote surgery or diagnosis of patients. They are becoming indispensable in agriculture to analyse crop yield.

The uses of LiDAR are so varied and wide that they are limited only by our imagination. The Eye of the AI, like The Eye of the Tiger, pushes not just Rocky Balboa, but all of humanity up the hard terrain of effort to the heights of fulfilment.
