The pattern-recognition power of AI is being harnessed across the world to predict crimes, finds Satyen K. Bordoloi, as he discovers that despite their potential, these systems come with inherent risks

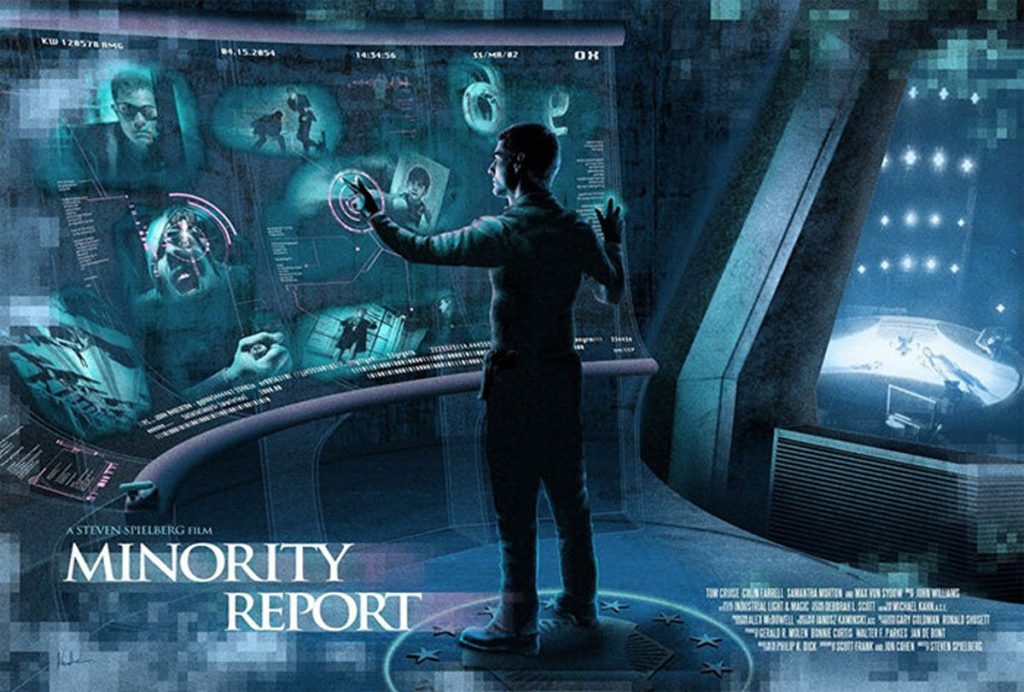

The 2002 sci-fi film Minority Report imagines Washington, D.C. in 2054 free of murder because its PreCrime unit can foresee killings. Police arrest would-be murderers moments before they strike. The absurdity the film explores is this: can you call it murder if you have successfully prevented it?

As with many a science-fiction narrative, much of the technology shown in the film didn't exist at the time. Two decades on, however, one piece of that technology does exist: the ability to predict crimes. From Sydney to London, Rio de Janeiro to Kanagawa, and Cape Town to Los Angeles, artificial intelligence has been deployed to predict, and thus prevent, crimes.

Call it director Steven Spielberg's precognition that within a decade of Minority Report's release, the Los Angeles Police Department became one of the first police departments in the world to use a crime-prediction program, PredPol, created by UCLA scientists. The intention was to see how crime data could be analysed scientifically to spot patterns of criminal behaviour.

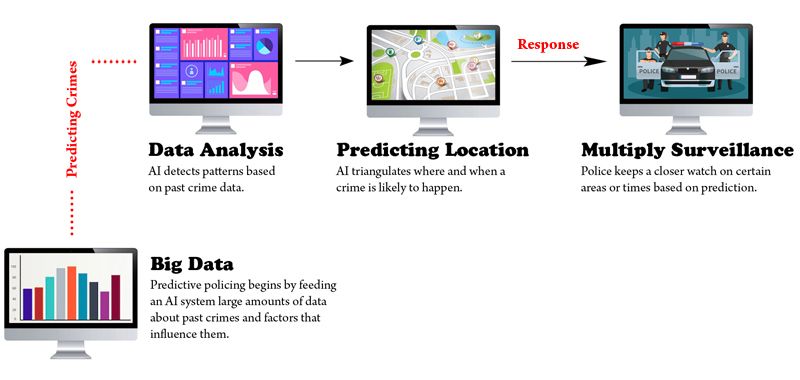

According to the PredPol website, the program uses a "machine-learning algorithm to calculate predictions" based on "3 data points – crime type, crime location and crime date/time". It "uses a data-driven approach to direct patrol operations" and to "predict where and when specific crimes are most likely to occur", helping officers "proactively patrol to help reduce crime rates and victimization."

If this sounds eerily like Minority Report, to an extent it is. Besides PredPol in the US, there are CrimeScan, developed at Carnegie Mellon University, CrimeRadar in Brazil and POL-INTEL in Denmark, along with hundreds of other adaptive, highly customizable AI systems that use machine learning and deep learning to predict crime in cities across the world.

That brings us to the most important question in this context:

Is crime predictable?

Daniel Neill, a computer scientist at Carnegie Mellon University and the man behind CrimeScan, told The Smithsonian Magazine that the idea behind using AI to predict crime is to track sparks before a fire breaks out: “We look at more minor crimes. Simple assaults could harden to aggravated assaults. Or you might have an escalating pattern of violence between two gangs.”

Their central idea is that, in certain ways, violent crimes break out in geographic clusters much as viral diseases do, and that lesser crimes are markers of more violent ones to come. Their algorithm is therefore fed 'indicator data' that includes crime reports, simple assaults, vandalism, disorderly conduct, 911 calls about shots fired or a person seen with a weapon, trends of violence by day of the week, and so on.
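To make the 'indicator data' idea concrete, here is a minimal sketch, not CrimeScan's actual model: it counts minor incidents per grid cell over the past week and flags cells where that count would be very unlikely given the cell's usual weekly level, using a Poisson tail probability. The category names, the grid cells "A" and "B", the baseline figures and the `alpha` threshold are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical indicator categories, loosely based on the list in the text
INDICATORS = {"simple_assault", "vandalism", "disorderly_conduct",
              "shots_fired_call", "weapon_sighting_call"}


def poisson_tail(k: int, lam: float) -> float:
    """P(X >= k) for X ~ Poisson(lam): the chance of seeing at least k
    indicator reports if the cell were behaving as usual."""
    if k <= 0:
        return 1.0
    term = math.exp(-lam)  # P(X = 0)
    cdf = term
    for i in range(1, k):
        term *= lam / i    # P(X = i) computed from P(X = i - 1)
        cdf += term
    return max(0.0, 1.0 - cdf)


def flag_hotspots(recent, baseline_weekly, alpha=0.01):
    """recent: (cell, category) reports from the past week.
    baseline_weekly: cell -> typical weekly count of indicator reports.
    Returns (cell, count, p_value) for cells with unusually many
    indicator reports, most surprising first."""
    counts = Counter(cell for cell, category in recent if category in INDICATORS)
    flagged = []
    for cell, k in counts.items():
        lam = baseline_weekly.get(cell, 0.5)  # hypothetical default for unseen cells
        p = poisson_tail(k, lam)
        if p < alpha:
            flagged.append((cell, k, p))
    return sorted(flagged, key=lambda item: item[2])


if __name__ == "__main__":
    reports = ([("A", "vandalism")] * 3 + [("A", "shots_fired_call")] * 4
               + [("B", "simple_assault")] * 2 + [("B", "parking")] * 10)
    baseline = {"A": 1.5, "B": 2.0}
    print(flag_hotspots(reports, baseline))
```

In this example the parking reports are ignored because they are not on the indicator list, so only cell A, whose seven indicator reports far exceed its usual 1.5 a week, is flagged. A fuller system would also weigh factors such as the day of the week and the seriousness of each report.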

PredPol gives an example on its website. If a house is broken into, the risk of it being burgled again in the following days goes up, because offenders are known to return to places where they have succeeded before. Not just the burgled house but also its neighbours face a greater risk of a break-in. Finally, police know that offenders tend not to stray far from the area they usually operate in, meaning 'crimes tend to cluster together'.

PredPol thus links "several key aspects of offender behavior to a mathematical structure that is used to predict how crime patterns will evolve." The website even shows the patented algorithm it uses to do this.
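The three observations above (repeat victimisation, risk spreading to neighbours, and clustering) map naturally onto a self-exciting model, in which every burglary temporarily raises the predicted rate nearby and that boost fades with time and distance. The sketch below illustrates that general idea using exponential decay in time and Gaussian decay in space. It is a toy built on assumptions, not PredPol's patented algorithm, and every constant in it is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Burglary:
    day: float  # when it happened (days since the start of the record)
    x: float    # location in km on a hypothetical city grid
    y: float


# Hypothetical parameters, chosen for illustration only
BACKGROUND_RATE = 0.05  # baseline risk everywhere (events per km^2 per day)
BOOST = 0.5             # how strongly each past burglary raises nearby risk
DECAY_DAYS = 7.0        # how quickly that boost fades over time
RADIUS_KM = 0.2         # how far the boost spreads in space


def risk(day: float, x: float, y: float, history: list[Burglary]) -> float:
    """Background rate plus a decaying boost from every earlier burglary:
    recent, nearby events raise the score the most."""
    total = BACKGROUND_RATE
    for b in history:
        dt = day - b.day
        if dt <= 0:
            continue  # only past events can raise current risk
        dist_sq = (x - b.x) ** 2 + (y - b.y) ** 2
        temporal = math.exp(-dt / DECAY_DAYS) / DECAY_DAYS
        spatial = (math.exp(-dist_sq / (2 * RADIUS_KM ** 2))
                   / (2 * math.pi * RADIUS_KM ** 2))
        total += BOOST * temporal * spatial
    return total


if __name__ == "__main__":
    history = [Burglary(day=10, x=1.0, y=1.0)]
    places = {"burgled house": (1.0, 1.0),
              "next door": (1.1, 1.0),
              "across town": (5.0, 5.0)}
    for name, (px, py) in places.items():
        print(name, round(risk(12, px, py, history), 3))
```

With one burglary on day 10, the scores on day 12 rank the burgled house first, the house next door second, and a location across town last, essentially back at the background rate. In a system of this kind, patrols would be steered towards the highest-scoring grid cells.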

What is predictive policing?

Predictive policing is defined as the "application of analytical techniques, particularly quantitative techniques, to identify likely targets for police intervention and prevent crime or solve past crimes by making statistical predictions." AI is well suited to this kind of pattern recognition: unlike humans, it does not tire, and given sound data it can sift far more records than any analyst could. This gives law enforcement agencies the opportunity to anticipate, and thus prevent, crimes.

This is done using many different types of applications and data sources, including live ones such as biometrics, facial recognition, smart cameras and video surveillance systems. A Deloitte study concluded that using AI in predictive policing could help cities reduce crime by 30-40% and cut emergency-service response times by 20-35%.

According to the AI Global Surveillance (AIGS) Index 2019, 56 countries used AI-enabled surveillance for safe city platforms. Such use is only growing, and India is no exception: almost all of its major cities have begun using AI in policing. It is fair to predict that, like their Western and East Asian counterparts, Indian police forces will move into predictive policing soon enough.

The problems with predictive policing

In the film Minority Report, the protagonist, played by Tom Cruise, believes in the infallibility of the system until he is forced to doubt it when it predicts that he will soon kill a man he doesn't even know.

In real life, the problems are much worse, and they begin with the data the system is fed. Data collected by prejudiced people will inherently carry that prejudice. Racist police officers in the US disproportionately ascribe criminal intent to African-American citizens, as the George Floyd case, among hundreds of others, makes evident. AI systems fed on such skewed data will themselves generate false or unreliable alerts.
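A toy simulation shows why skewed records are so hard to correct once a system starts directing patrols. Both districts below have exactly the same true crime rate; district A merely starts with more recorded incidents. Patrols go where records are highest, and in this toy, crime enters the data only where the patrol is present. The rates, starting counts and the 'patrol the top district' rule are hypothetical simplifications, not a model of any real product.

```python
import random


def simulate(days: int = 365, true_rate: float = 0.3, seed: int = 0) -> dict:
    """Two districts with the SAME true daily chance of a crime.
    District A starts with more recorded incidents (a biased history).
    Each day the patrol goes to the district with the most records,
    and only crime in the patrolled district gets recorded."""
    rng = random.Random(seed)
    recorded = {"A": 12, "B": 8}  # hypothetical biased starting records
    for _ in range(days):
        target = max(recorded, key=recorded.get)  # the 'predicted hotspot'
        for district in recorded:
            crime_happened = rng.random() < true_rate  # identical in both
            if crime_happened and district == target:
                recorded[district] += 1  # only observed crime enters the data
    return recorded


if __name__ == "__main__":
    print(simulate())
```

B's count stays frozen at its starting value of 8 while A's keeps climbing, so the records appear to confirm that A is the dangerous district even though the underlying crime in both is identical.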

Another big flaw of such predictions is that they ignore a simple fact: humans are incredibly complex and unpredictable. No matter how many past data points are considered, it is impossible to predict human behaviour accurately. And if individual behaviour is hard to predict, how can it be predicted for entire communities and cities? What about the potential for rehabilitation of people with past records? A system built on past patterns risks unfairly punishing people for past aggression even after they have reformed.

Many experts therefore argue that, given the complexity of both human nature and the contexts in which these systems are deployed, it is impossible to design AI systems that are fully ethical.

The adoption of AI-based predictive policing, which is becoming inevitable, must therefore go hand in hand with a discussion about ethics and regulation. However attractive these systems seem, civil liberties and human rights need to be protected through proper regulation.

Minority Report ends with the world realising how flawed the predictive system can be, and instead of being expanded, it is shut down. That is not how things are panning out in real life. Predictive policing is too promising for much of the world to abandon it completely. Our best protection, therefore, is to regulate these systems effectively.

Predictive defense is the best offense against any possible misuse of predictive policing systems.
