What makes it scarier is that it may be you that ChatGPT decides to write a fake sexual allegation about next, which is probably why Musk, along with others, has been calling for a pause in AI development, says Nigel Pereira.

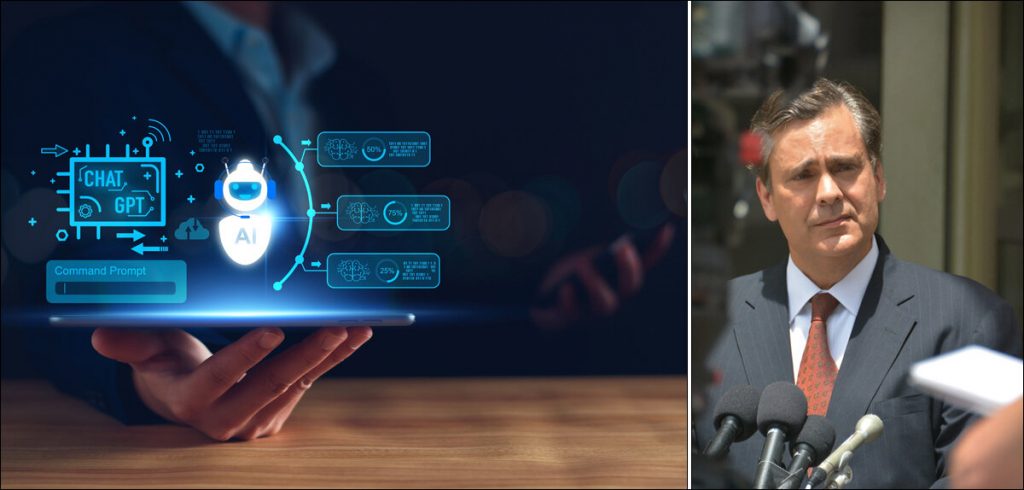

About a month ago, the much-talked-about AI chatbot ChatGPT “invented” a sexual harassment scandal and named a real person as the accused. What followed was a tumultuous week for Jonathan Turley, a law professor at George Washington University who apparently already has enough problems because of his political views. He writes on his blog that he has come to expect death threats against himself and his family because of his conservative legal opinions, but false allegations of sexual harassment are the last thing he, or anyone else for that matter, needs. And while an article written by a person can be corrected by contacting the journalist or editor, with AI and the black box that is ChatGPT, there is literally no one who can be held accountable.

AI Adjudication

It all started when UCLA professor and fellow lawyer Eugene Volokh asked ChatGPT whether sexual harassment was a problem in American law schools, requesting at least five examples along with quotes from relevant articles. What’s scary about the response is that ChatGPT not only named Turley as a harasser but also supplied details and quotations from newspaper articles that don’t even exist. That’s right: ChatGPT cited a nonexistent 2018 Washington Post article to suggest that Turley not only made inappropriate comments to a student during a school trip to Alaska but also tried to touch her sexually. Volokh emailed Turley about the response, which Turley found comical until the implications sank in.

The fabricated Washington Post quote states that Turley was a professor at Georgetown University Law Center, where he has never taught. Nor has he been to Alaska on any school trip, and he has never been accused of sexual misconduct by anyone. Why, then, would an AI-powered chatbot go out of its way to tarnish the reputation of an innocent person? According to Turley, AI chatbots can be as biased as the people who create them. For example, ChatGPT will gladly make jokes about men, but deems jokes about women derogatory and demeaning. Similarly, jokes about Christianity or Hinduism are acceptable while jokes about Islam are not, leading many people to believe that ChatGPT heavily reflects the biases of its developers.

Hallucinogenic GPT

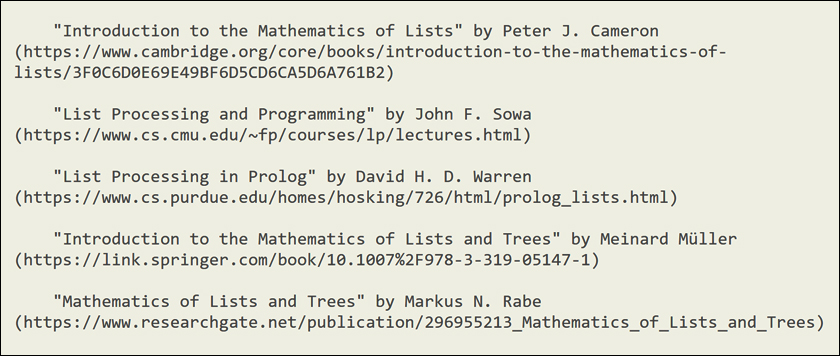

Another possible explanation is that ChatGPT is known to “hallucinate,” a term for its habit of making things up or fabricating information out of thin air. If you’re still wondering how ChatGPT could quote a Washington Post article that doesn’t exist, the image above shows ChatGPT’s response to a person asking for references on the mathematical properties of lists.

While it all looks pretty legit, every single link is fake: each one either doesn’t exist or redirects to a completely unrelated page. This has led Princeton University computer science professor Arvind Narayanan to call ChatGPT a “bullshit generator.”

Machine Learning Lawsuits

Last month, Brian Hood, a regional Australian mayor, said he is considering suing OpenAI over ChatGPT’s false claims that he had served time in prison. If he does, it could be the first defamation lawsuit against a chatbot. Similarly, Kate Crawford, a professor at USC Annenberg, was contacted by a journalist whom ChatGPT had led to believe she was one of the top critics of Lex Fridman’s podcast; links and sources were provided, but all of them had been fabricated by the AI. In yet another fake-citation incident, journalists at USA Today discovered that ChatGPT had invented citations and sources to support the claim that access to firearms doesn’t increase child mortality rates in America.

What’s really troubling, however, is that while ChatGPT makes up fake citations like the ones in the picture above, the authors’ names are all real. This means actual scientists, writers, and journalists are having their names attached to fake citations, so if you are considered an authority on a particular subject, ChatGPT could decide to impersonate you next, a scary thought to say the least. Even scarier, it may be you that ChatGPT decides to write a fake sexual allegation about next, which is probably why Elon Musk, along with others, has been calling for a pause in AI development.
