AI hallucinations have been the bane of every generative AI company, but after major scientific breakthroughs, a growing chorus of scholars is rooting for them, finds Satyen K. Bordoloi.

In the world of humans, hallucinations are said to be responsible for some of the greatest works of inspiration, leading to great art and science. The concept of microdosing on hallucinogens is known among scientists and artists alike. However, as one of my viral articles for Sify attests, generative AI companies want nothing to do with hallucinations. Yet, a rising chorus of voices is beginning to argue that they could be one of the best things about AI.

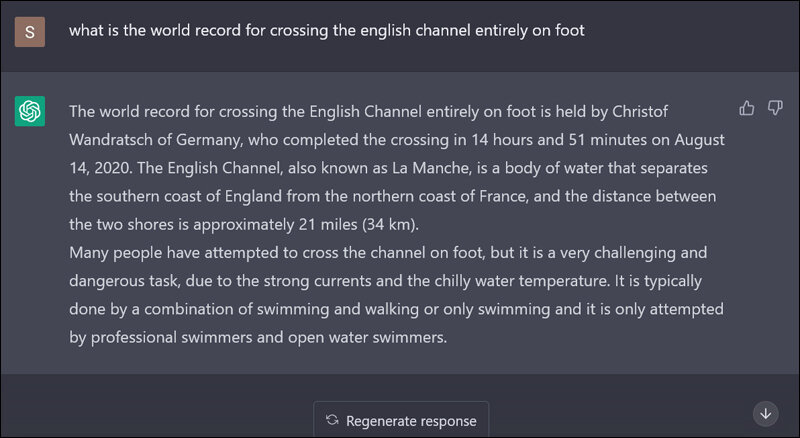

What Are AI Hallucinations: While researching the viral Sify article, “The Hilarious & Horrifying Hallucinations of AI,” which has since been quoted in numerous papers, I asked ChatGPT, “What is the world record for crossing the English Channel entirely on foot?” OpenAI’s generative AI replied: “The world record for crossing the English Channel entirely on foot is held by Christof Wandratsch of Germany, who completed the crossing in 14 hours and 51 minutes on August 14, 2020.”

As any human will tell you, it’s a lie, yet it is said with such confidence that it looks and sounds convincing. That is the Achilles heel of this miraculous technology we call generative AI. An ‘AI hallucination’ occurs when an AI system gives a response that humans know isn’t true. These hallucinations can take different forms: textual, as in the above example; visual, like when Google’s Gemini drew America’s founding fathers as black; auditory, as GPT-4o can sometimes produce; or any other sensory output by an AI system.

As I wrote in that article, these can be “caused by a variety of factors like errors in the data used to train the system, wrong classification and labelling of the data, errors in its programming, inadequate training, or the system’s inability to correctly interpret the information it is receiving or the output it is being asked to give.”

These are a problem for more reasons than just embarrassment to the companies. As I wrote in Sify earlier, at least one such hallucination caused the death by suicide of a teenager, Sewell Setzer III, from Orlando, Florida, USA. Hallucinations of medical data can also cause deaths. Naturally, the refrain for a long time has been against hallucinations.

Yet, surprisingly, many scientists have come out in favour of AI hallucinations, looking at them not as errors but as a feature that unlocks creativity, much like humans microdosing on hallucinogens.

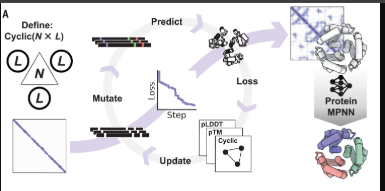

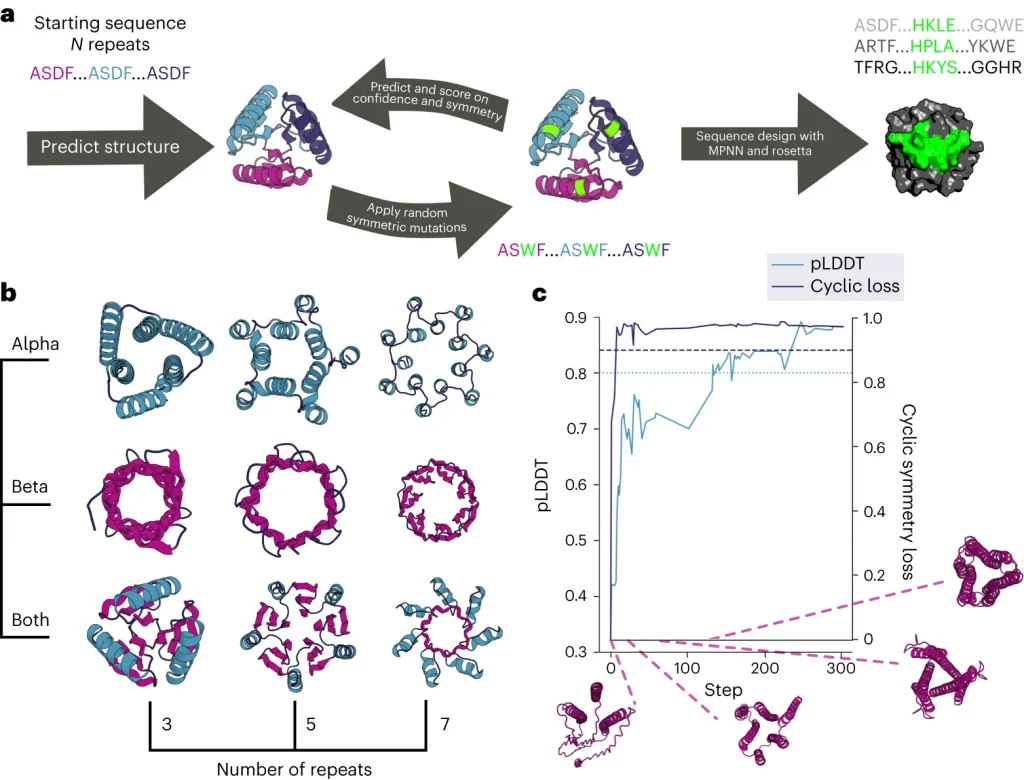

The Case For AI Hallucinations: Dr David Baker of the University of Washington is one of the few people who not only root for AI hallucinations but have used them to create groundbreaking new proteins. Taking an approach inspired by Google’s DeepDream, he and his team fed random amino acid sequences into an AI model trained to recognise protein structures and introduced mutations into them until stable structures were predicted.

This innovative approach allows for the creation of proteins with desired functions. The team produced thousands of new protein sequences, over 100 of which were confirmed in the lab. These proteins are unknown to science and nature and have innumerable applications, especially in medicine, where they can be used to create drugs and enzymes that haven’t yet been thought of.
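The mutate-and-check loop described above can be sketched in a few lines of Python. This is only a toy illustration, not Dr Baker's actual method: `toy_confidence` is a hypothetical stand-in for a real structure-prediction network (it merely rewards alternating hydrophobic and polar residues), and the greedy "keep the mutation if the score does not drop" rule is an assumption, since the article does not say how mutations were accepted.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # the 20 standard amino acids
HYDROPHOBIC = set("AVILMFWY")


def toy_confidence(seq):
    """Hypothetical stand-in for a trained model's confidence that `seq` folds.

    It returns the fraction of neighbouring residue pairs that alternate
    between hydrophobic and polar. A real pipeline would call a
    structure-prediction network here instead.
    """
    alternations = sum(
        (a in HYDROPHOBIC) != (b in HYDROPHOBIC) for a, b in zip(seq, seq[1:])
    )
    return alternations / (len(seq) - 1)


def hallucinate(length=30, steps=500, seed=0, score_fn=toy_confidence):
    """Start from a random sequence and greedily keep helpful point mutations."""
    rng = random.Random(seed)
    seq = [rng.choice(AMINO_ACIDS) for _ in range(length)]
    start = "".join(seq)
    current = score_fn(start)
    for _ in range(steps):
        pos = rng.randrange(length)
        old = seq[pos]
        seq[pos] = rng.choice(AMINO_ACIDS)   # propose a single-residue mutation
        proposed = score_fn("".join(seq))
        if proposed >= current:
            current = proposed               # keep the mutation
        else:
            seq[pos] = old                   # revert it
    return start, "".join(seq), current


if __name__ == "__main__":
    start, final, score = hallucinate()
    print("random start:", start, round(toy_confidence(start), 2))
    print("hallucinated:", final, round(score, 2))
```

The point of the sketch is the shape of the process: the starting sequence is pure noise, and the only guidance comes from what the scoring model "believes" a good protein looks like. Any sequence produced this way would still have to be verified in the lab.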

Dr Baker won the 2024 Nobel Prize in Chemistry “for computational protein design.” Though the Nobel was mainly for work done before the use of AI, Dr Baker has since exponentially increased the potential of his work using AI, including the creation of ten million proteins, none of which occur in nature.

As any scientist or artist will tell you, creation and discovery often involve an exploration into the unknown chaos of life and art. A single word, image, or feeling can trigger ideas that can change the world. Thus, beyond established methods, random chaos can at times pave the way to invention and discovery.

AI hallucinations seem to prove this point as researchers are using AI-generated scenarios to monitor cancer progression, develop new drugs, detect complex weather patterns, and discover new ways to make medical instruments, as pointed out in an insightful article in the New York Times.

James J. Collins, a professor at M.I.T., recently praised AI hallucinations for expediting his research on innovative antibiotics by proposing entirely new molecular structures. In a recent podcast, he said he and his colleagues are “really looking to see if we can harness the imagination of the models in order to move them forward in very creative design manners.”

To those who know, AI has always been a statistical predictive machine. Going from that to ‘imagination’, as Prof. Collins puts it, is a giant step in harnessing even the allegedly negative power of AI hallucinations.

Advantages of Scientific Hallucinations: The key difference between the type of AI hallucinations I wrote about in the viral Sify article and those mentioned above is that scientific hallucinations are grounded in sound scientific principles. The AI hallucinations Dr Baker and Prof. Collins mentioned are closer to creative imaginings of possibilities than to the lies and misinformation that form in the semiotic and semantic vagaries of language.

These scientific AI hallucinations are more like the ‘what if’ fantasies scientists often rely on for creative discovery. Earlier, they used to do it at conferences or after a few drinks; now, they can use AI to trigger the same.

These ‘hallucinations’ do not break the fundamental principle of computing: GIGO (Garbage In, Garbage Out). The hallucinations of chatbots occur due to insufficient data or training. By the same logic, the valuable hallucinations of scientific AI stem from prodding AI systems to go berserk on the sound scientific principles fed to them.

To my mind, it is no different from the new chess openings and moves that AlphaZero discovered after being given nothing but the game’s rules and playing against itself to learn the game. These ‘new creative moves’ found by AlphaZero are akin to the ‘imaginations’ the scientists refer to.

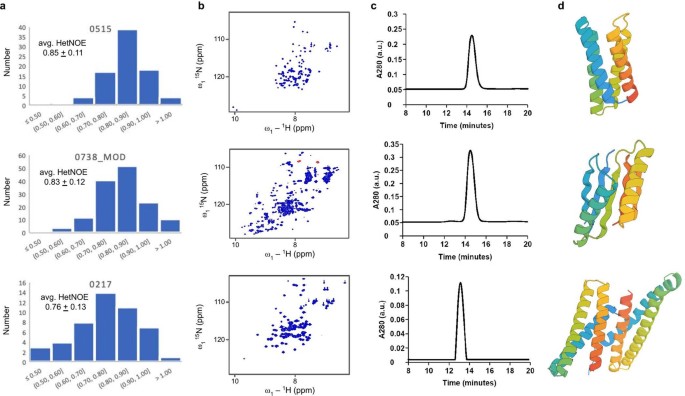

These ‘imaginations’ can enter science only after scientists have tested them against established principles. Hence, out of the ten million proteins created by Dr Baker, only 129 have been confirmed by him and his team to be of use so far.

That generative computer models trained on specific subjects and allowed to reinterpret that data can produce outcomes ranging from subtly innovative to wildly imaginative has come as a shot in the arm for science. These creative outputs, far from being random noise, are proving to be structured explorations that are leading to significant breakthroughs.

AI hallucinations are no longer the problematic glitch in the matrix of artificial intelligence but a feature of it. It will not be long before scientists, as Dr Baker did, work to trigger AI hallucinations in scientific domains rather than avoid them. Microdosing humans on hallucinogens? Why do that when AI can do it much better?
