A recent study confirms what should have been common sense – effective AI needs natural intelligence. Satyen K. Bordoloi explains how this finding reinforces AI’s role as a powerful tool.

We all have a friend who bought an expensive DSLR camera, thinking it would automatically make them the next Annie Leibovitz, or an uncle who bought a top-of-the-line gaming PC only to use it for sending emails. Well, it turns out the same principle applies to artificial intelligence: all the computing power in the world won’t help if you don’t know what you’re doing.

A groundbreaking study by MIT’s Aidan Toner-Rodgers finally puts numbers to what should have been common sense: AI is a tool, albeit a sophisticated one, and like any tool, its effectiveness depends on who wields it. The research, conducted in the R&D lab of a large, unnamed U.S. firm, offers a stark lesson for anyone weighing whether to invest in AI tools for their business: while top scientists nearly doubled their output with AI assistance, the bottom third of researchers saw little improvement. It’s like giving a Ferrari to someone who can barely drive: impressive, but ultimately pointless.

The Tale of Two Scientists: Picture two scientists walking into a lab (no, this isn’t the start of a bad joke). Both are given access to the same cutting-edge AI tool for materials discovery. The first, armed with years of experience and deep domain knowledge, uses the AI like a master conductor leading an orchestra: directing it to generate promising compounds, quickly identifying which suggestions are worth pursuing, and efficiently filtering out the duds.

The second scientist treats the AI like a lottery machine, testing its suggestions at random with all the discernment of a toddler in a candy store. The result? While the first scientist’s productivity soars by nearly 100%, the second scientist’s output barely budges. It’s a cousin of the old computing adage GIGO (garbage in, garbage out), except that now the garbage is poor judgment and the machine is a state-of-the-art AI model.

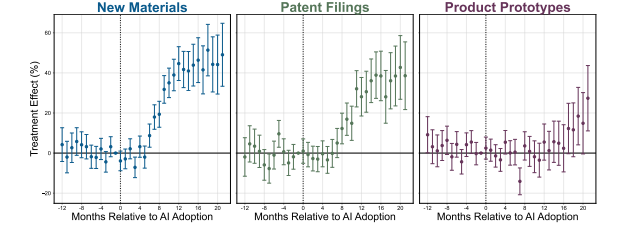

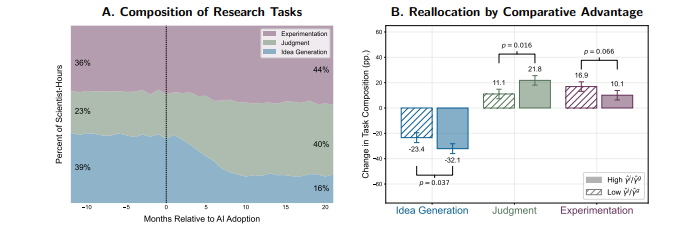

Numbers Don’t Lie (Even If AI Sometimes Does): The study’s findings are as clear as a silicon crystal: “AI-assisted researchers discover 44% more materials, resulting in a 39% increase in patent filings and a 17% rise in downstream product innovation. These compounds possess more novel chemical structures and lead to more radical inventions.” But here’s where it gets interesting. The technology automated 57% of “idea-generation” tasks, essentially turning scientists into professional idea evaluators. It’s like being promoted from chef to food critic: you’re no longer cooking the meals, but you had better know what makes a good dish.

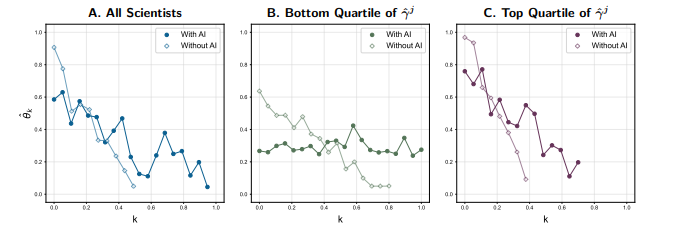

And therein lies the rub. Top scientists leveraged their domain knowledge to prioritise promising AI suggestions, while others wasted significant resources testing false positives. It’s akin to having a brilliant research assistant who generates thousands of ideas: if you can’t tell the good from the bad, you’ll spend your days in a lab coat chasing wild geese.

The Great Divide: Perhaps the most striking finding is how AI widened the productivity gap between researchers. The technology acted like a career amplifier, making the good scientists better and leaving the struggling ones… well, still struggling. It’s as if AI handed everyone a megaphone: the eloquent became more persuasive, while the incoherent merely became louder.

The study finds that evaluation ability is positively correlated with pre-AI productivity. In other words, the scientists who thrived with AI didn’t become good overnight: they were already good, and AI simply gave them superpowers. Give a master chef and a novice cook the same upgraded kitchen, and one will create masterpieces while the other will just burn things more efficiently.

The Human Touch in a Digital Age: Ironically, as machines get better at generating ideas, human judgment becomes more valuable. Contrary to popular perception, the study concludes that “improvements in machine prediction make human judgment and decision-making more valuable.” It’s a plot twist worthy of a sci-fi novel: in our quest to automate human intelligence, we have made human expertise more crucial, not less, a point that remains underappreciated.

Nor has this shift gone unnoticed by management. The study reports that the lab later fired 3% of its researchers, 83% of whom were in the bottom quartile of judgment. It’s a harsh reminder that in an AI-augmented world, the ability to evaluate and prioritise matters more than the ability to generate ideas.

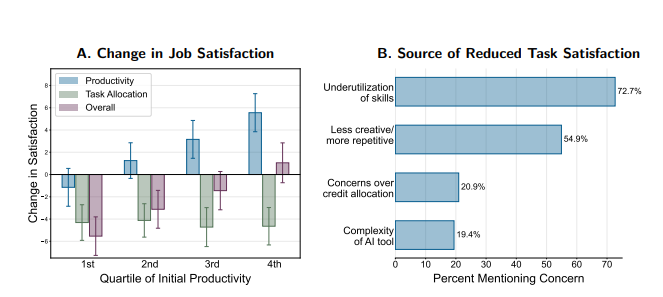

The Other Side of Progress: But it’s not all roses and Nobel prizes. The study also found a 44% reduction in job satisfaction across the board, even among the high performers. Scientists reported feeling less creative and more like idea evaluators than inventors. It’s as if we’ve turned composers into music critics – still important, but perhaps not what they signed up for.

The Bottom Line: The message is clear. AI is not your ticket to instant genius. Like a piano, a paintbrush, or a particle accelerator, it’s just a tool, albeit an incredibly sophisticated one, and its effectiveness depends entirely on its user’s knowledge, judgment, and expertise.

So, before rushing to implement the latest AI solution, organisations should first focus on building human expertise. After all, giving someone ChatGPT without domain knowledge is like giving them a Stradivarius violin without music lessons – you’ll get noise instead of a symphony, regardless of the instrument’s cost.

Ultimately, the study confirms what we should have known all along: artificial intelligence still needs natural intelligence to be truly effective. Perhaps the converse holds too: if a person is endowed only with natural stupidity, AI will amplify that as well. In that sense, AI is our perfect mirror, showing us who we truly are rather than who we presume ourselves to be.

And that, perhaps, is the most human thing about it.
