An image of Jesus and half a shrimp fused together, a SpaceX UFO craft, tiny children baking impossibly perfect cakes, and well-written articles outlining problems and magical solutions by the end of them.

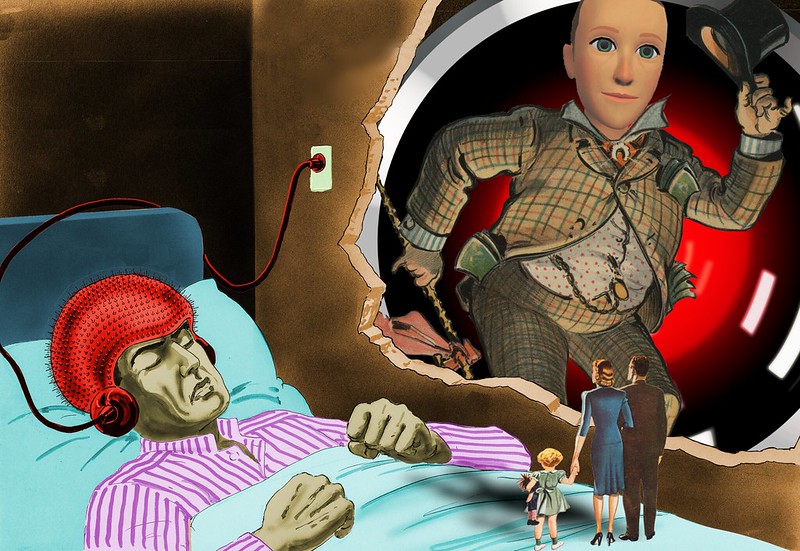

No, you don’t need to imagine them; these images, texts, and media are already available on the internet. Welcome to the world of “slop,” which is akin to spam and can be described as scammy, low-quality, and dubious garbage generated by AI (artificial intelligence). It is a broad term that refers to unwanted, shoddy AI content in books, art, social media, and, increasingly, internet search results. It’s not exactly disinformation, but rather vague text, inert prose, and weird narratives. And yes, it’s everywhere, but is it that big a problem?

What Is Slop?

The word slop makes most people picture livestock troughs being filled with piles of unpalatable food. Online, however, the first content to be labelled slop was AI-generated material on message boards. The term mirrors similar labels for large amounts of low-quality information on the internet, like ‘pink slime,’ which describes poor-quality news reports made to look like local news.

Slop can take any form: videos, images, text, and even complete websites. It can also trickle into real life. For instance, on Halloween 2024, thousands of people gathered in Dublin’s city centre for a non-existent Halloween parade, promoted by a website that used fake images, fake reviews, and some real photos of unrelated events. There was even ‘real-life slop’ in the form of the now-infamous Willy Wonka experience in Glasgow. Promoted as an interactive family experience using dreamy AI images, the event turned out to be low-quality in reality and was cancelled halfway through.

That’s not all. Did Google suggest adding non-toxic glue to make cheese stick to a pizza? Did a Facebook post, or a low-priced digital book that’s not the one you were looking for, pop up out of nowhere in your feed or search results? All slop.

Why Is Slop A Problem?

In the end, AI-generated slop feeds websites that want to optimise SEO as cheaply as possible. This could affect the traffic that news outlets receive and how we engage with information on the web. On the other hand, slop has been compared to email spam, which platforms eventually became quite efficient at filtering out. Even AI hallucinations, which were prevalent in the very first versions of AI chatbots, have decreased in later versions, but slop has stayed. The question is: will slop fizzle out, or could it degrade the entire information ecosystem?

One valid concern is that since AI-generated text is cheaper, faster, and easier to produce, it will proliferate on the web. Eventually, if it is fed back as training data into machine learning (ML) models and large language models (LLMs), the value and quality of the information they produce could be greatly eroded.

Then, there’s the problem of ‘careless speech,’ a set of risks defined in a paper by academics Sandra Wachter, Chris Russell, and Brent Mittelstadt. According to them, careless speech is AI-generated output that features oversimplified information, subtle inaccuracies, or biased responses passed off, in a confident tone, as the truth. It’s not disinformation, and the aim isn’t to mislead, but rather to sound confident and convincing. After all, the thing that’s most dangerous to society isn’t a liar; it’s a bullshitter. The problem? Careless speech could easily pass under the radar.

Finally, there’s the issue of AI slop masquerading as ‘news’ on AI-generated websites. Such sites are optimised for SEO to maximise advertising revenue: think Buzzfeed or The Onion on steroids, with repeated search terms and clickbait headlines. While most are low-quality clickbait websites publishing content about entertainment and celebrities, others also cover topics like politics, obituaries, and cryptocurrencies.

In February 2024, for instance, a Wired article reported on Serbian entrepreneur Nebojša Vujinović Vujo, who bought abandoned news sites, filled them with AI-generated slop, and pocketed substantial ad revenue.

AI ‘deepfakes’ of Hurricane Helene victims circulate on social media, ‘hurt real people’ https://t.co/UIpRD7vxhv (New York Post, @nypost, October 5, 2024)

Is Slop Here To Stay – and Slay?

Slop stepped into the limelight, if we can call it that, when Google incorporated its Gemini AI model into its US search results in May 2024. Instead of pointing users toward useful links, Gemini attempted to answer queries directly with an “AI Overview,” a chunk of text atop the results page offering its best guess at what users were looking for. Microsoft has likewise incorporated its AI into Bing search results. Not surprisingly, after widely shared errors such as the glue-on-pizza suggestion, Google decided to roll back some features until the problems could be ironed out.

Slop might not seem harmful at first sight, but the problem is that it’s all over the internet, and beyond. Digital platforms have been accused of leaning heavily into slop, and some have announced features that let users create AI-generated content. Yes, AI can help with efficiency, flexibility, and scalability, but it shouldn’t compromise content, newsroom values, or editorial judgement. Whether these tools will end up creating engaging, unique posts or filling feeds with digital slop, only time will tell.
