NVIDIA has again supercharged the AI landscape with their latest JONaS chip. But why do we need AI-specific chips in the first place, asks Satyen K. Bordoloi.

Earlier this year, a generative AI startup I worked for faced a common dilemma: a shortage of AI chips. Ideally, we would have had several running on an in-house server, but even securing AI chip time through a Software as a Service (SaaS) provider at a reasonable rate was a struggle.

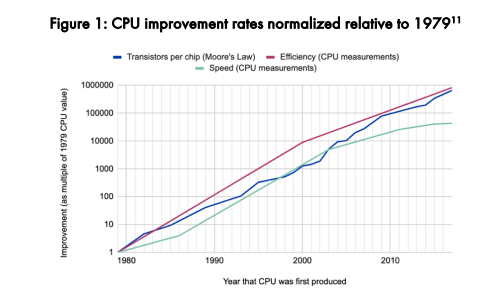

These chips, building blocks of the future and each with more transistors than the total produced in the world during the 1970s, have propelled NVIDIA to unprecedented valuations. Now, NVIDIA has announced a new chip that sold out in most markets before its official release date.

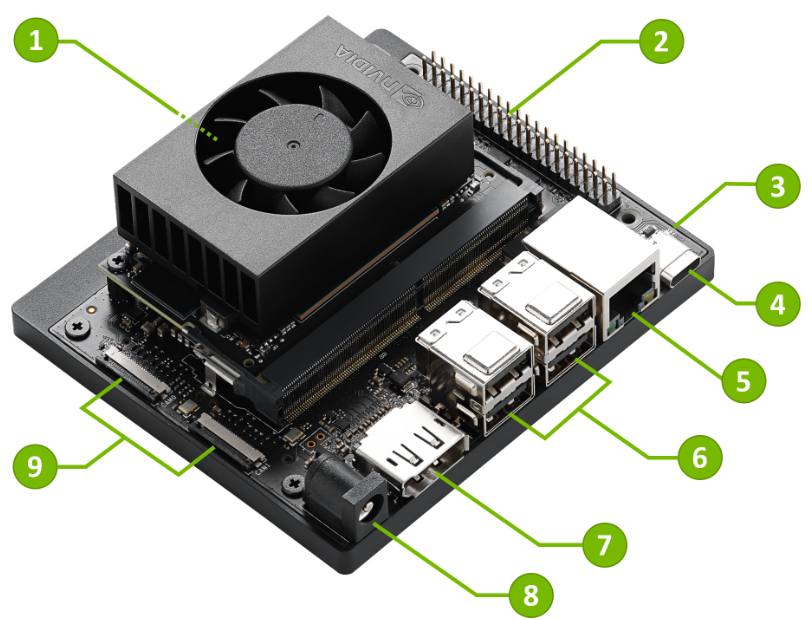

Jetson Orin Nano Super: The Jetson Orin Nano Super, or JONaS (my sobriquet, not NVIDIA's), is a single-board computer designed specifically for the generative AI market. It boasts an Ampere architecture GPU with 1,024 CUDA cores and 32 Tensor cores, a hexa-core ARM Cortex-A78AE CPU, and 8 GB of LPDDR5 RAM. Priced at $249, it is nearly half the cost of its predecessor, making it a more affordable and attractive solution for developers and startups.

The module operates at a 25-watt power draw, up from 15 watts in the previous version, resulting in a performance boost of between 30 and 70%. This improvement is achieved through several critical adjustments, including an increase in memory bandwidth from 68 GB/s to 102 GB/s, which raises data transfer rates. The CPU frequency has been boosted to 1.7 GHz, providing faster processing for general-purpose computing tasks, while the GPU frequency has been raised to 1020 MHz, improving the module’s ability to handle complex graphical and computational tasks.
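To put the power and performance figures side by side, here is a back-of-the-envelope sketch in Python. It takes the numbers quoted above at face value and assumes (the article does not say so) that 15 W and 25 W are the power levels at which the 30 to 70% boost was measured. The function names are my own, purely for illustration.

```python
# Figures quoted in the paragraph above.
OLD_POWER_W = 15.0
NEW_POWER_W = 25.0
PERF_GAIN_RANGE = (0.30, 0.70)  # claimed boost of 30% to 70%


def relative_increase(old, new):
    """Fractional increase from old to new (0.5 means 50% more)."""
    return (new - old) / old


def perf_per_watt_ratio(perf_gain, old_power, new_power):
    """New performance-per-watt divided by the old one (1.0 = unchanged).

    Assumption: the performance gain was measured at exactly these power levels.
    """
    return (1.0 + perf_gain) / (new_power / old_power)


power_increase = relative_increase(OLD_POWER_W, NEW_POWER_W)
ppw_low, ppw_high = (
    perf_per_watt_ratio(gain, OLD_POWER_W, NEW_POWER_W) for gain in PERF_GAIN_RANGE
)

print(f"Power draw increase: {power_increase:.0%}")
print(f"Performance per watt vs. previous version: {ppw_low:.2f}x to {ppw_high:.2f}x")
```

Under those assumptions, the module draws roughly 67% more power, and performance per watt lands at about 0.78 to 1.02 times that of the previous version. Real workloads rarely sit at peak power, so treat this as a rough guide rather than a benchmark.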

JONaS is designed to support a wide range of applications, from autonomous vehicles and robotics to smart cities and edge AI. Its compact size and high performance make it ideal for embedded systems that require robust AI capabilities. For instance, it can enable real-time sensor data processing in self-driving cars, making critical decisions on the fly. It can also be integrated into urban infrastructure to manage traffic flow, monitor public safety, and optimise energy consumption.

The Evolution of AI Chips: The term “Artificial Intelligence” was first introduced at the Dartmouth Conference in 1956, but it wasn’t until the late 20th century that AI began to leverage specific computing hardware. In the 1980s, researchers like Yann LeCun and Geoffrey Hinton made significant strides in neural networks and deep learning algorithms. For example, in 1989, LeCun and his team at Bell Labs developed a neural network that could recognise handwritten zip codes, marking an important real-world application of AI.

Before the adoption of AI-specific chips, general-purpose CPUs were the norm. However, the high-definition video and gaming industries’ need for greater parallel-processing capacity led to the emergence of Graphics Processing Units (GPUs). In 2009, researchers from Stanford University showed that modern GPUs offered far more computational power than multi-core CPUs for deep learning tasks. This realisation led to the widespread use of GPUs in AI applications, because their parallel architecture suits the large-scale data processing that AI algorithms require.

Today, several types of AI chips are in use, each with unique strengths. GPUs are the cornerstone of AI training and inference due to their efficient parallel processing capabilities. Field-Programmable Gate Arrays (FPGAs) and Application-Specific Integrated Circuits (ASICs) provide versatility and high performance for specific tasks. Additionally, Neural Processing Units (NPUs) or AI accelerators are exclusively designed for neural network processing, offering high performance and low power consumption, making them ideal for edge AI applications where data is processed locally rather than in a cloud server.

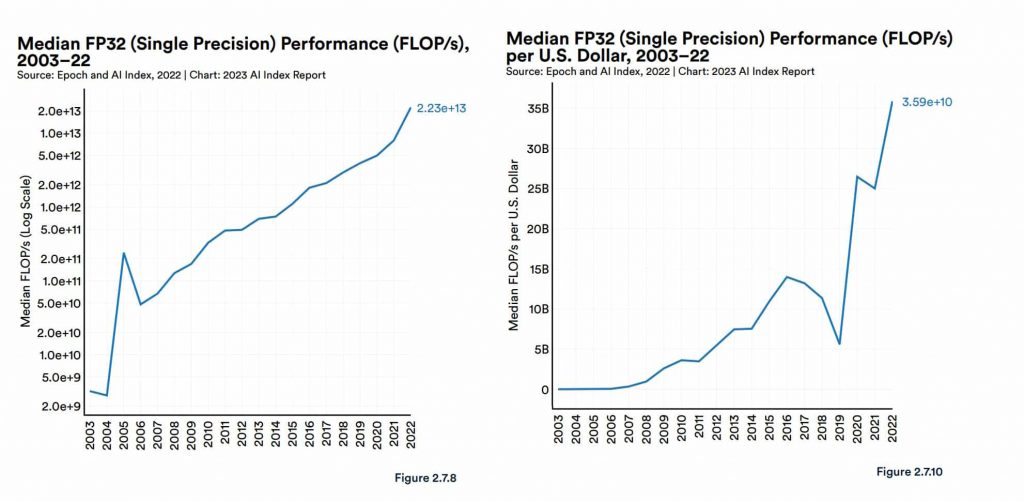

Why Specialised AI Chips Are Indispensable: AI and machine learning (ML) tasks require processing vast amounts of data in parallel, a workload that traditional CPUs handle poorly. CPUs are optimised for sequential processing, with a small number of powerful cores that each work through instructions largely one after another, whereas AI chips like GPUs and NPUs are built for parallel processing, executing numerous calculations simultaneously across many simpler processing units. This parallel architecture significantly speeds up the processing time for complex AI models.
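The difference between the two styles can be sketched in a few lines of Python. The example multiplies a small matrix by a vector, the kind of operation neural networks perform constantly, first one row at a time and then with every row handed out as an independent task. This is only an illustration of how the work is divided: the function names are hypothetical, and Python threads on a CPU will not deliver the speed-up a GPU's hardware does.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Multiply two equal-length lists element by element and sum the results."""
    return sum(a * b for a, b in zip(row, vec))


def matvec_sequential(matrix, vec):
    """CPU-style: finish one row completely before starting the next."""
    out = []
    for row in matrix:
        out.append(dot(row, vec))
    return out


def matvec_parallel(matrix, vec, workers=4):
    """GPU-style decomposition: every row is an independent task.

    No row needs another row's result, so all of them can be handed to
    workers at once. pool.map returns results in the original row order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))


matrix = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
vec = [1, 0, -1]

sequential_result = matvec_sequential(matrix, vec)
parallel_result = matvec_parallel(matrix, vec)
print(sequential_result == parallel_result)
```

Both versions produce the same answer. What changes is that the second never forces one row to wait for another, and that independence is what lets a chip with a thousand or more cores work on a thousand rows at the same moment.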

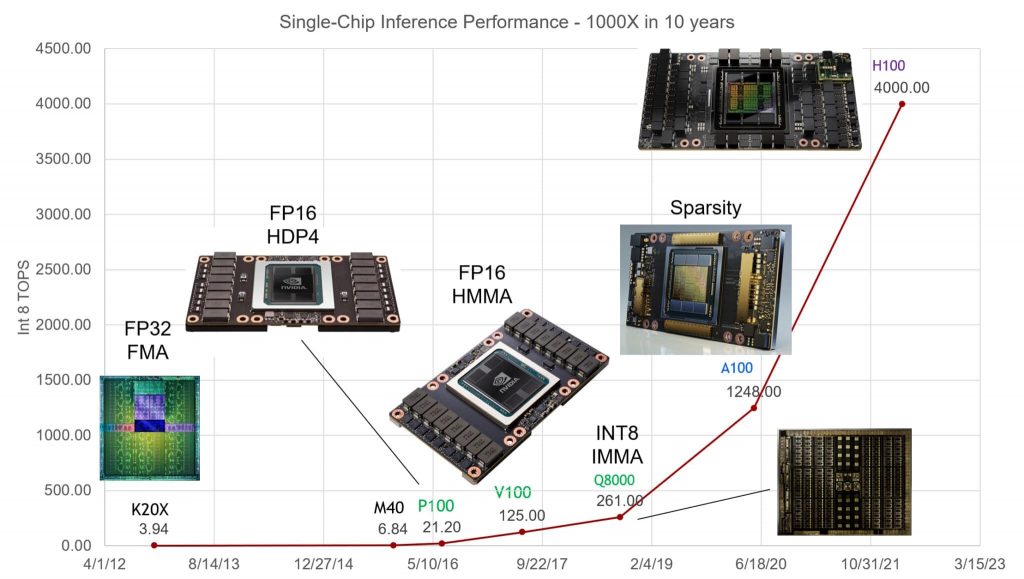

GPUs are also more energy-efficient than CPUs for AI tasks, making them ideal for accelerating machine learning workloads. For example, NVIDIA says its GPUs have increased performance on AI inference by 1,000 times over the last ten years while reducing energy consumption and total cost of ownership. JONaS is a prime example of this efficiency.

Generative AI models like ChatGPT require thousands of GPUs working in tandem to train and run efficiently. The scalability of GPUs allows the clustering of multiple units to achieve the necessary computational power, which is not feasible with traditional CPUs.

The Future of AI Chips: As AI continues to evolve, the future of AI chips holds both promises and challenges. The current trajectory suggests that AI chips will continue to improve in performance, efficiency, and specialisation. Future advancements will likely focus on further optimising these chips for specific AI tasks, such as natural language processing, computer vision, and predictive analytics.

The integration of AI chips with quantum computing is particularly promising. This is perhaps why Google named its quantum computing division Quantum AI, akin to a washing powder company naming its product Detergent. Quantum computing has the potential to address the growing computational demands of AI by providing exponential scaling, as we recently wrote in our piece about Google Quantum AI’s chip, Willow. This convergence could revolutionise various sectors, including healthcare, finance, and research, by enabling the processing of vast amounts of data with unprecedented efficiency and accuracy.

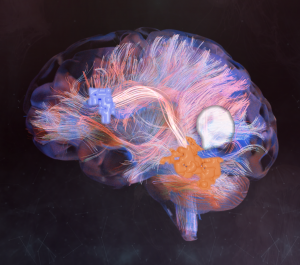

Besides quantum computing, new architectures such as neuromorphic and photonic chips are also being explored. Neuromorphic chips mimic the human brain’s structure and function, potentially offering more efficient and adaptive AI processing. Photonic chips use light instead of electricity to transfer data, which could significantly reduce energy consumption and increase processing speeds.

Advanced chips like NVIDIA’s JONaS are indeed a positive step for the AI industry, but for AI startups struggling with the immediate scarcity of AI chips, they mean little if they sell out before they even go on sale. The rapid acceleration of AI development is straining the supply chain for AI chips such as GPUs and specialised accelerators. As chip manufacturers struggle to keep pace with orders, the shortages could cripple AI advancement, affecting everything from data centres to consumer devices.

Given that AI is set to permeate every aspect of our lives, the availability of such technology is essential to ensure that all stakeholders – from startups to large enterprises – can drive innovation forward and ultimately benefit the world. The need for greater innovations to bring forth more powerful, efficient, and specialised hardware is, hence, not just a wish but a necessity.
