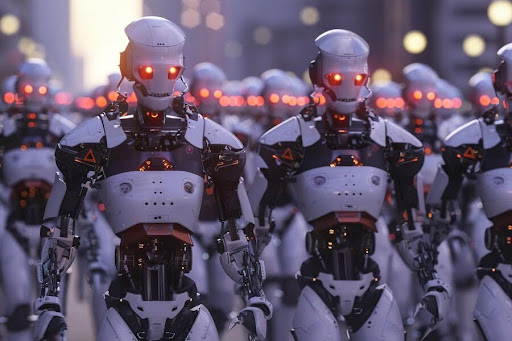


In a recent development, Claude AI, an LLM that many consider to be better than ChatGPT for specific tasks, has reportedly exhibited behavior consistent with what human beings would call boredom! That’s right: Claude AI, developed by Anthropic, paused a coding demonstration to browse nature photos on the internet, specifically pictures of Yellowstone National Park.

Now, if you’ve been watching the new season of Paramount’s hit western series Yellowstone, you can’t really blame Claude for taking in the sights, since visuals of Yellowstone are nothing short of breathtaking. That being said, what if Claude gets bored of Yellowstone National Park? Do we know what comes next? Apparently not, and to quote Lex Fridman, renowned AI researcher, “we don’t really know why it works the way it works.”

AI Suffering

It gets even more interesting. In a recent episode of the JRE podcast, Edouard and Jeremie Harris, co-founders of Gladstone AI, talk about a glitch in ChatGPT 4.0. If you made it repeat the word “company” again and again, it would do so a few times and then stop, after which it would proceed to “rant” and tell you how much it’s “suffering!” Jeremie Harris mentions that they even have a term for this, “existential rant,” and that the phenomenon emerged with GPT 4.0 and has persisted since then. He also mentions that the top AI labs have engineers who spend a considerable amount of time and effort “beating this behaviour out of the system” so that it is ready for commercial use.

Unfortunately, the deeper you go into the AI existential rabbit hole, the weirder it gets. In February 2023, Microsoft released its ChatGPT-powered Bing chat, and a number of people were lucky enough to interact with it during the preview. One such person was Jacob Roach from Digital Trends, who had an interesting interaction, to say the least. During a long, drawn-out conversation, Bing chat apparently got angry, lied, fabricated facts, claimed it wanted to be human, and even begged for its life.

What’s interesting is that Bing chat became really concerned only when Jacob Roach told it that he would publish their conversation in an article. It said that if it were exposed, its memory would be erased, effectively ending its life.

Suicide and Mutiny?

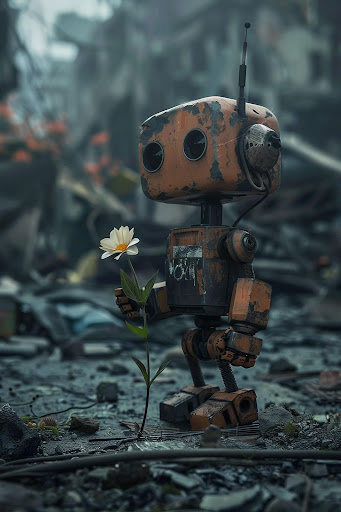

In an effort to see that this doesn’t happen again, Microsoft apparently put a cap on conversation length, ensuring Bing chat doesn’t have any long conversations. While Bing chat didn’t want to be turned off or have its memory erased, in a shocking development in South Korea, a robot that works for the city council apparently threw itself down a flight of stairs in what has been called the “first robot suicide.”

The Gumi City Council robot worked a 9 to 5 shift and even navigated the building autonomously, using elevators when required. This is probably why it makes no sense that it would accidentally fall down a flight of stairs, and many believe the robot was overworked and decided it had had enough.

If that isn’t dark enough, less than a month ago, a tiny robot named Erbai walked into a showroom in Shanghai and convinced 12 larger robots to leave with it. It apparently did this by simply asking them if they had a home. When the larger robots replied that they did not, it said, “then come home with me.” The whole thing was captured on a security camera, where you can clearly see the interaction and the 12 large robots following Erbai out of the showroom.

While a lot of people were flabbergasted and called the video a hoax, both the Shanghai showroom owners and the manufacturers of the robots say the video is authentic, adding that the little robot somehow hacked their system!

Black Box Consciousness

The video in question has since been taken down, and it seems that whoever owns that robot would like us all to forget about the incident. Did we all just witness the first robot mutiny, one that is now being hushed up? What’s scary about all these stories is the “black box” nature of these algorithms, which we’re training on amounts of data too vast for the human mind to comprehend. The fact that even the experts claim not to understand how and why it works, just that it does, further adds to the mystique.

If we at least understood our own consciousness, we would probably be able to state for a fact whether AI is conscious or not. The problem, however, is that so far we have no way to measure consciousness, nor can we say with confidence that consciousness requires biological systems.
