The addition of ReALM, with its more natural interactions and on-device AI processing, could give rise to a new style of user interface.

Have you ever wondered why your phone sometimes treats you like a stranger? You ask for a weather forecast, and it opens a weather app showing a location other than the one you are in. You tell your voice assistant to ‘turn the brightness up’ and you get a list of synonyms for ‘bright.’ Misunderstandings like these are among the most common frustrations with AI assistants. But what if your phone could interact with you as naturally as someone you are close to? Apple’s announcement of ReALM has people asking whether a new era of mobile AI is coming, one that can finally pin down the details of our queries, and wondering about a smarter Siri or a more intuitive mobile experience. Read on to see how ReALM could reshape the way we talk to our phones.

So, let’s discuss ReALM and how it could change Siri.

Developed by Apple researchers, ReALM (Reference Resolution as Language Modelling) is a project designed to improve how Siri interprets and responds to voice commands. It tackles a common challenge: resolving ambiguous references.

Say you are browsing headlines on your smartphone and come across a music festival announcement in a news article. Interested, you ask, “Siri, play something from the bands playing at this festival.” Currently, Siri might misunderstand and play tunes from completely different artists. ReALM could fill that gap by examining what is on your screen. Siri would grasp that “this festival” means the festival you are reading about and put together a playlist of the performing bands, making music discovery frustration-free.

How does ReALM work?

Conventional large language models, such as GPT-4, rely on extensive training data and refinement techniques to generate answers. Although these systems can handle text, images, or audio, they have limitations in areas such as speed and accuracy.

Apple’s ReALM model streamlines AI processing by converting its inputs, both visual and textual context, into a unified text format. This simplifies the pipeline by removing the need for sophisticated image recognition, which consumes a lot of processing power. Since mobile devices have limited resources, ReALM’s emphasis on text helps it run smoothly on them. Moreover, ReALM curbs “AI hallucinations” (wrong or meaningless output) by constraining the decoding procedure and applying post-processing. This helps keep the AI’s output correct and tied to the actual context, which in turn builds user confidence.
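To make the idea of a unified text format concrete, here is a minimal Python sketch of how on-screen items might be flattened into text. It is an illustration only, not Apple's implementation: the `ScreenEntity` class, the `screen_to_text` function, the `line_margin` parameter, and the `[n]` tag format are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """A piece of on-screen text and the centre of its bounding box (hypothetical)."""
    text: str
    x: float  # horizontal centre, in pixels
    y: float  # vertical centre, in pixels


def screen_to_text(entities, line_margin=10.0):
    """Flatten on-screen entities into one block of text.

    Entities are grouped into rows from top to bottom: an entity joins the
    current row if its vertical centre is within `line_margin` pixels of the
    first entity placed in that row. Each row is then read left to right.
    Every entity gets a numeric tag so a language model could refer to it
    by index instead of by pixel position.
    """
    rows = []
    for entity in sorted(entities, key=lambda e: e.y):
        if rows and abs(entity.y - rows[-1][0].y) <= line_margin:
            rows[-1].append(entity)
        else:
            rows.append([entity])

    lines = []
    index = 0
    for row in rows:
        tagged = []
        for entity in sorted(row, key=lambda e: e.x):
            index += 1
            tagged.append(f"[{index}] {entity.text}")
        lines.append("\t".join(tagged))  # same row: tab-separated
    return "\n".join(lines)             # new row: newline


if __name__ == "__main__":
    screen = [
        ScreenEntity("Snooze", x=200, y=102),
        ScreenEntity("7:00 AM", x=20, y=100),
        ScreenEntity("Alarms", x=20, y=10),
    ]
    print(screen_to_text(screen))
```

Once the screen is plain text like this, a request such as “turn that off” and the list of tagged entities can be handed to a text-only model together, with no image model in the loop.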

ReALM is Apple’s answer to the shortcomings of current virtual assistants. Its mission is clear: to change how AI understands human language, with a focus on the challenging task of resolving ambiguous references. Here’s how ReALM breaks new ground.

- Understanding Implicit Requests: Suppose you’re texting a friend about a movie you have watched and say, “That scene when the spaceship was chasing escorts was great!” Current AI assistants might find it difficult to identify which movie you mean. ReALM can use context clues to work out which film you are talking about. For example, it can review your recent messages or your streaming app and then provide additional information, such as reviews and trailers.

- Smarter Processing for Mobile Devices: In contrast to cloud-based counterparts, which depend on remote servers, ReALM is designed to run on the device itself with low latency. Its focus on text input rather than image processing makes it efficient and quick, both for mobile applications with limited resources and for anything that needs a real-time response.

- Reducing AI Hallucinations: We have all dealt with cases where an AI came up with an irrelevant response. ReALM handles this cleverly. Through decoding constraints, it keeps the AI’s answer from straying or disconnecting from the actual context. This mitigates “AI hallucinations” and makes for a more trustworthy and reliable interaction.

- The Power of Contextual Awareness: ReALM is built to take the surrounding context into account so that its responses fit the situation. It can tell the difference between what’s on your screen, what you’re chatting about, and even what’s happening in the background. This way, users feel that they are being treated as individuals. Picture yourself looking for a new recipe while checking your grocery list app. ReALM, knowing the context, could suggest options that use the ingredients you already have on hand, saving you time.
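The hallucination point in the list above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not ReALM's actual mechanism: `pick_constrained` and `constrain_to_candidates` are hypothetical helper names, and the scores stand in for whatever a real model would produce.

```python
import re


def pick_constrained(scores, allowed):
    """Greedy constrained choice: the best-scoring token among `allowed` only.

    `scores` maps candidate output tokens to model scores (hypothetical).
    Tokens outside `allowed` are ignored, however high they score.
    """
    allowed_scores = {tok: s for tok, s in scores.items() if tok in allowed}
    if not allowed_scores:
        return None
    return max(allowed_scores, key=allowed_scores.get)


def constrain_to_candidates(raw_output, candidate_ids):
    """Post-process free-form model output into valid entity indices.

    Numbers that do not match a known candidate are dropped, and duplicates
    are removed, so the assistant never acts on an entity that is not there.
    """
    valid = set(candidate_ids)
    resolved = []
    for token in re.findall(r"\d+", raw_output):
        index = int(token)
        if index in valid and index not in resolved:
            resolved.append(index)
    return resolved


if __name__ == "__main__":
    # Token "9" scores highest but is not a real entity, so "3" wins.
    print(pick_constrained({"1": 0.2, "9": 0.9, "3": 0.5}, {"1", "2", "3"}))
    # Index 7 does not exist among the candidates and is discarded.
    print(constrain_to_candidates("2, 7 and 2", [1, 2, 3]))
```

The design choice in both helpers is the same: the set of things the assistant may refer to is fixed by the context, and the model's output is only allowed to select from it.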

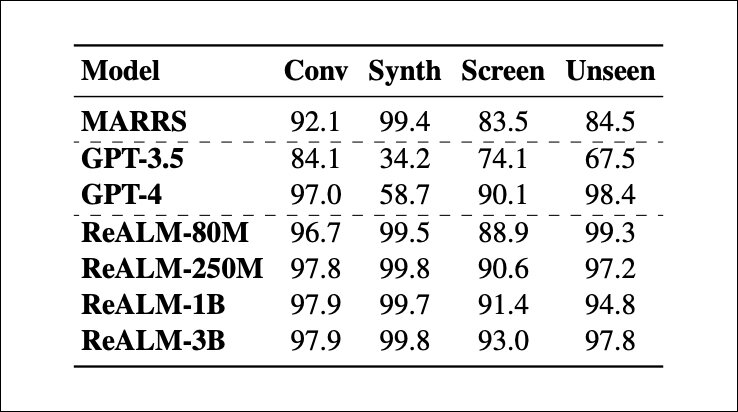

Understanding the Benchmarks: ReALM Compared with GPT-4 and MARRS

This section summarises the key points of the ReALM research paper, where ReALM is compared with MARRS (Multimodal Reference Resolution System) and GPT-4. The results illustrate how ReALM deals with unfamiliar situations and handles domain-specific and complex queries more intelligently.

The benchmarks reveal a significant advantage for ReALM: its ability to adapt to completely new conditions that are outside the scope of previous systems. Here’s a closer look:

Suppose you are managing alarms on your phone. Systems built on predefined logic, such as MARRS, process only entities that fall under certain predetermined types, like messages and emails. They might be unable to understand a request such as “Turn that off” because “alarm” is an unfamiliar entity type for them. ReALM, on the other hand, draws on its language understanding. It can consider your request (“Turn that off”) together with the elements on your screen (a clock app showing several alarms), which enables it to resolve the reference and complete the action. This is evident in the benchmarks, where alarms serve as the unseen domain: the results show that ReALM is more effective than the system that depends on predefined logic.
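The difference between a fixed list of entity types and a text-only framing can be shown with a toy Python contrast. Neither function reflects how MARRS or ReALM is actually implemented: `SUPPORTED_TYPES`, `typed_candidates`, `build_prompt`, and the prompt wording are all hypothetical.

```python
SUPPORTED_TYPES = {"message", "email"}  # hypothetical fixed type list


def typed_candidates(entities):
    """Pipeline with predefined types: entities of unknown type are dropped."""
    return [e for e in entities if e["type"] in SUPPORTED_TYPES]


def build_prompt(request, entities):
    """Text-only framing: every entity is listed, whatever its type."""
    listing = "\n".join(
        f"[{i}] {e['type']}: {e['text']}"
        for i, e in enumerate(entities, start=1)
    )
    return (
        "Entities on screen:\n"
        f"{listing}\n"
        f"Request: {request}\n"
        "Which entities does the request refer to?"
    )


if __name__ == "__main__":
    alarms = [
        {"type": "alarm", "text": "7:00 AM, weekdays"},
        {"type": "alarm", "text": "9:30 AM, weekends"},
    ]
    # The typed pipeline has nothing left to resolve against.
    print(typed_candidates(alarms))
    # The text framing still presents both alarms to the model.
    print(build_prompt("Turn that off", alarms))
```

In the first case a new entity type needs new code before anything can be resolved. In the second, the new type is just another word in the prompt, and the model's general language knowledge does the rest.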

Beyond Accuracy: Detecting Complex User Requests

Although accuracy is vital, understanding ambiguous user intentions is also important for a good user experience. For instance, say you want to dim the lights at home using a voice assistant. You say, “Can you make it a little darker?” while your screen shows a brightness slider alongside information about your smart lights. GPT-4 might latch onto the single brightness slider and change the screen brightness instead, which is not what you asked for. ReALM, in contrast, is designed around user requests in specific domains, such as home automation. It can work out that “it” refers to the smart bulb, not your phone screen. This shows that ReALM was created with users in mind and with an understanding of domains like home automation.

Siri 2.0? The Future of AI Interaction

Expectations have grown as rumours circulate about merging Siri with ReALM, which might be the prologue to a whole new age of AI communication. With WWDC just around the corner, many people are thinking about a Siri built on ReALM technology. Imagine a virtual assistant that fits seamlessly into your digital life, understanding your needs and giving appropriate answers. In that case, Siri 2.0 could be a turning point, offering new insights and helping with our daily challenges. The addition of ReALM, with its more natural interactions and on-device AI processing, could give rise to a new style of user interface.
