When it comes to the world of data centres, it’s getting hot in here. No, really. Data centres consume copious amounts of power, nearly all of which ends up as heat. Since servers don’t tolerate heat well, strong and efficient data centre cooling is a necessity. Recall the first law of thermodynamics: energy cannot be created or destroyed; it only changes form. So, a data centre consuming 1 MW of electricity turns it into a roughly equivalent 1 MW of heat, which is a lot. And the more electricity a data centre consumes, the greater the challenge of managing the resulting heat.
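To put the 1 MW figure into units commonly used for cooling capacity, here is a minimal sketch. It assumes that essentially all electrical power drawn ends up as heat; the function name `cooling_load` is hypothetical and the conversion constants are standard unit conversions, not values taken from any particular sizing tool.

```python
# Rough conversion of an electrical load into an equivalent cooling load.
# Assumption: essentially all electrical power drawn ends up as heat.

BTU_PER_HOUR_PER_WATT = 3.412142          # 1 W = 3.412142 BTU/h
WATTS_PER_TON_OF_REFRIGERATION = 3516.85  # 1 ton = 12,000 BTU/h


def cooling_load(electrical_power_w):
    """Return the heat to be removed, expressed in three common units."""
    heat_w = electrical_power_w  # first law: the energy only changes form
    return {
        "heat_kw": heat_w / 1000,
        "btu_per_hour": heat_w * BTU_PER_HOUR_PER_WATT,
        "tons_of_refrigeration": heat_w / WATTS_PER_TON_OF_REFRIGERATION,
    }


load = cooling_load(1_000_000)  # the 1 MW example from the text
for unit, value in load.items():
    print(f"{unit}: {value:,.1f}")
```

Under these assumptions, 1 MW of IT load corresponds to roughly 3.4 million BTU/h, or a little over 280 tons of refrigeration.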

The debate about how data centre cooling systems should be designed has been raging for decades. With ever-growing workload densities, the question is timelier than ever, especially since data centres consumed 460 TWh of electricity in 2022, according to an International Energy Agency report. That amounted to about 2% of all electricity usage worldwide.
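The two figures above can be cross-checked with some quick arithmetic. This is only a back-of-the-envelope sketch: the 2% share is a rounded value, so the implied global total is approximate, and the variable names are illustrative.

```python
# Back-of-the-envelope arithmetic on the quoted figures.
HOURS_PER_YEAR = 8760  # non-leap year

data_centre_twh = 460.0  # annual data centre electricity use in 2022
share_of_world = 0.02    # roughly 2% of global electricity use (rounded)

# Global electricity use implied by the two figures, in TWh per year
implied_world_twh = data_centre_twh / share_of_world

# Average continuous power draw of all data centres, in GW (1 TWh = 1000 GWh)
average_power_gw = data_centre_twh * 1000 / HOURS_PER_YEAR

print(f"Implied global use: {implied_world_twh:,.0f} TWh/year")
print(f"Average data centre draw: {average_power_gw:.1f} GW")
```

In other words, the figures imply global electricity use of about 23,000 TWh a year, and a continuous data centre draw of a little over 52 GW, almost all of which has to be removed again as heat.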

However, the fact of the matter is that there’s no one correct way to design efficient data centres. Over the years, cooling technologies have only driven home that point and have evolved to meet changing needs. Here’s a look back at the evolution of data centre cooling systems.

The Early Years: Raised-Floor Cooling

Since the 1970s, most data centres have been cooled using pressurized air from a raised floor or slab. The cooling unit, called a CRAH (Computer Room Air Handler) or CRAC (Computer Room Air Conditioner), cooled the air, which fans running at a constant speed then moved through the room. The setup pushed the pressurized air into the space beneath the raised floor and up through perforated tiles to cool the machines. While this system worked fine for the low-density computing of the time, there were trade-offs surrounding durability, flexibility, and cost.

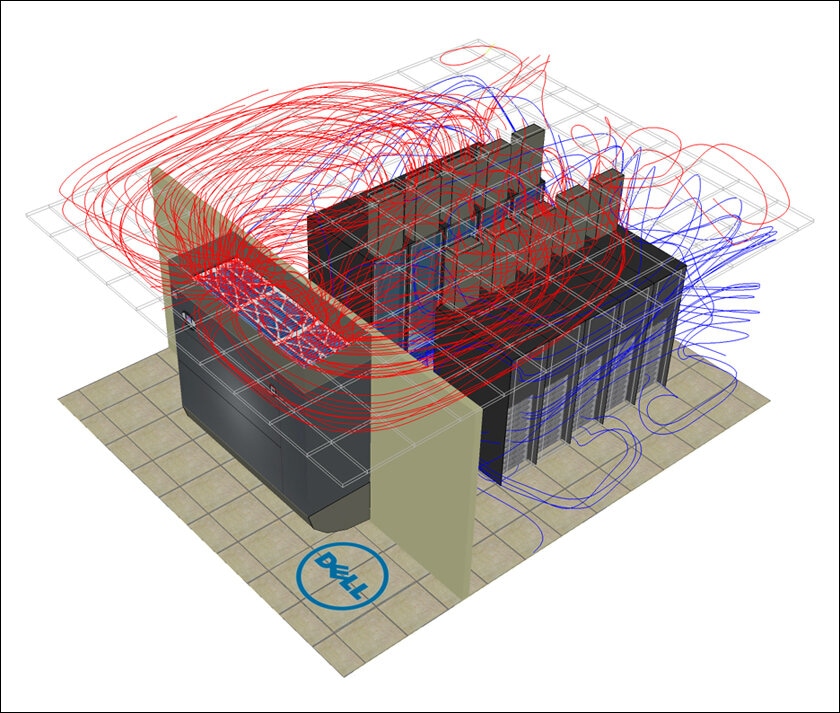

That said, a critical issue with CRAC/CRAH systems was stratification: a temperature gradient from the bottom of the racks to the top. The servers close to the floor got the coolest air, while those at the top ran at considerably warmer temperatures. Moreover, increasing airflow to mitigate this issue decreased efficiency, because the cool supply air mixed with the hot return air. Consequently, cold aisle/hot aisle layouts were developed as a containment strategy.
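The effect of supply air mixing with return air can be illustrated with a very simple model. The sketch below assumes ideal mixing of two air streams with equal specific heat, and every temperature and fraction in it is hypothetical; real airflow in a data hall is far messier.

```python
# Idealized mixing model: a fraction of the hot return air recirculates and
# mixes with the cold supply air before it reaches the server inlet.
# All temperatures and fractions below are hypothetical.

def inlet_temperature(supply_c, return_c, recirculation):
    """Server inlet temperature for a recirculated fraction between 0 and 1."""
    if not 0.0 <= recirculation <= 1.0:
        raise ValueError("recirculation must be between 0 and 1")
    return (1.0 - recirculation) * supply_c + recirculation * return_c


for fraction in (0.0, 0.1, 0.3):
    t = inlet_temperature(supply_c=18.0, return_c=35.0, recirculation=fraction)
    print(f"{fraction:.0%} recirculation -> inlet at {t:.1f} °C")
```

Even a modest share of recirculated hot air raises the inlet temperature by several degrees in this model, which is the problem that separating cold and hot aisles is meant to limit.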

Upgrading to Fans

The next major idea for the data centre cooling problem was to build cooling systems into the perimeter or the walls of the data hall. Air-cooling fan units were positioned on the perimeter wall to distribute cooling across the data hall. Because these fans ran at variable speeds, data centre operators could control energy consumption and temperatures with much greater precision, and the fans consumed significantly less energy than constant-speed systems. At that time, the fan wall approach was seen as a new technology because it addressed issues like maintenance costs and noise while increasing efficiency. However, its long-term potential and durability are still open to debate.
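Why slowing a fan down saves so much energy follows from the fan affinity laws, under which power scales roughly with the cube of speed. The sketch below is an idealized illustration only: it ignores motor and drive losses, and the function name `fan_power_fraction` is hypothetical.

```python
# Ideal fan affinity law: airflow scales linearly with fan speed,
# while the power drawn scales with the cube of fan speed.
# Assumption: an ideal fan with no motor or drive losses.

def fan_power_fraction(speed_fraction):
    """Power relative to full speed for a fan at the given relative speed."""
    if speed_fraction < 0:
        raise ValueError("speed_fraction must be non-negative")
    return speed_fraction ** 3


for speed in (1.0, 0.8, 0.5):
    power = fan_power_fraction(speed)
    print(f"{speed:.0%} speed -> {speed:.0%} airflow, {power:.1%} power")
```

In this idealized model, a fan running at 80% speed still delivers 80% of the airflow but draws only about half the power, which a constant-speed fan cannot take advantage of.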

The Others: ACs, Chilling Mats, and More

Air conditioning remains the most prevalent means of cooling, even in some professional data centre setups. The system is akin to a residential air conditioner: it draws the server airflow through the air conditioner, cools it via a radiator grille, and recirculates it to the servers. The next most widely adopted cooling system is the chiller, which, unlike an air conditioner, uses a water-based solution to transfer the heat. While air conditioning is usually simpler and more affordable, chilled water systems are more energy-efficient. However, they are more complex in design and have more components to maintain.

Many data centres also use adiabatic mats and chambers, which are wetted with water that then evaporates. As the water evaporates, it draws heat from the mats and chambers, cooling them along with the air inside. Even though it’s viable, it’s considered a somewhat exotic solution.
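A rough sense of how much water evaporative cooling involves comes from the latent heat of vaporization. The sketch below assumes, purely for illustration, that all of the heat is absorbed by evaporating water and uses an approximate latent heat value for water near room temperature; the function name is hypothetical.

```python
# Idealized estimate of the water evaporated to absorb a given heat load.
# Assumption: all heat is removed by evaporation alone, with no losses.

LATENT_HEAT_J_PER_KG = 2.45e6  # approximate value for water near 20 °C
SECONDS_PER_HOUR = 3600


def water_evaporated_kg_per_hour(heat_w):
    """Mass of water that must evaporate each hour to absorb heat_w watts."""
    return heat_w / LATENT_HEAT_J_PER_KG * SECONDS_PER_HOUR


water_per_hour = water_evaporated_kg_per_hour(1_000_000)  # 1 MW heat load
print(f"About {water_per_hour:,.0f} kg of water per hour")
```

Under these assumptions, absorbing 1 MW of heat takes on the order of 1.5 tonnes of water per hour, so water consumption is one of the trade-offs of this approach.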

The Future: Water, Liquid, and Immersion Cooling Systems

While air-cooling efficiency continues to improve, water-cooling systems are also increasingly popular. In fact, data centres have even been built underwater in seas and fjords. Water cooling systems run strategically placed water pipes through server rooms, one for cold water inflow and another for hot water outflow. Moreover, the radiators on heat-generating GPUs, CPUs, and other equipment are linked directly to this system. So, the approach cools the equipment, the data centre, and the premises, and generates warm water for additional uses.
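Part of water's appeal is how much more heat it carries per unit of volume than air. The following sketch compares the flow each fluid would need to carry away 1 MW with a 10 K temperature rise; the fluid properties are approximate room-temperature values, and the 10 K rise is a hypothetical choice for illustration.

```python
# Volumetric flow needed to carry a heat load: flow = P / (density * cp * dT)
# Fluid properties are approximate values near room temperature.

AIR = {"density": 1.2, "cp": 1005.0}      # kg/m^3 and J/(kg*K)
WATER = {"density": 998.0, "cp": 4186.0}  # kg/m^3 and J/(kg*K)


def volumetric_flow_m3_per_s(heat_w, delta_t_k, fluid):
    """Flow needed to absorb heat_w watts with a temperature rise of delta_t_k."""
    return heat_w / (fluid["density"] * fluid["cp"] * delta_t_k)


air_flow = volumetric_flow_m3_per_s(1_000_000, 10.0, AIR)
water_flow = volumetric_flow_m3_per_s(1_000_000, 10.0, WATER)

print(f"Air:   {air_flow:.1f} m^3/s")
print(f"Water: {water_flow * 1000:.1f} L/s")
print(f"Air needs about {air_flow / water_flow:,.0f} times the volume")
```

With these numbers, air has to move at roughly 83 cubic metres per second, while water manages the same job at about 24 litres per second, a difference of more than three orders of magnitude.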

The future of data centre cooling revolves around liquid and immersion cooling, with many technologies available to meet this challenge. These include direct-to-chip technologies and rear-door heat exchangers, both of which are growing in popularity. Even though immersion cooling is in the early-adopter phase, the technology is expected to go mainstream in the next four years. So, while the advent of liquid cooling is already upon us, the rollout isn’t going to happen overnight.

It’s hard to imagine that the future of cooling is still so undecided after data centres have been around for decades, but the fundamental physics hasn’t changed, even if the methods vary greatly. What’s important is that data centre designers and operators maintain flexibility by designing facilities that can switch between air-cooled and liquid-cooled systems. This ensures that liquid cooling can be deployed quickly without requiring extensive retrofitting of the existing setup.
