Helping people overcome disabilities is probably one of the most noble causes you could use AI to tackle. In this post, we look at AI-powered glasses for the blind, as well as AI-powered gloves, and throat patches for the hearing and speech impaired.

While the internet is flooded with stories about AI doing everything from writing code to making music, few uses of the technology are as worthwhile as helping people overcome disabilities. Not every blind person has access to a seeing-eye dog, and a person who is deaf or hearing impaired doesn’t always have access to someone who knows sign language. A device that could guide you with sounds would therefore be invaluable to someone who is blind or has low vision. Similarly, a device that converts what someone is saying into text in real time would undoubtedly improve the quality of life of people with hearing disabilities. As an interesting side note, while many people with hearing disabilities excel at lip reading, only about 30% of speech sounds can be lip-read.

(B) The standing task requires the participant to use the provided wearable device to search and reach for a target item situated among multiple distractor items (bottles). (Image Credit: PLOS)

Acoustic-touch smart glasses for the blind and vision impaired

Researchers from the University of Technology Sydney, the University of Sydney, and ARIA (Augmented Reality in Audio) Research have developed a pair of glasses that can help blind people navigate using spatial audio. The technology is referred to as “acoustic touch,” and it aims to help people perceive their surroundings through sound cues. According to Robert Yearsley, CEO and co-founder of ARIA Research, it does this with a computer small enough to mount on a pair of glasses, coupled with AI and machine vision that maps a room and then helps you navigate around it using spatial sounds, each uniquely tied to the item, object, or obstacle it represents.

For example, if you’re looking for your keys, the glasses will emit a sound (probably like keys jangling) from the direction of your keys, so you can reach out and grab them. Similarly, leaves or trees are represented by a rustling sound, and mobile phones by a buzzing sound. While the glasses are still at the prototype stage, the highlight of the story is that Yearsley has employed people who are blind or vision impaired as subject matter experts to help fine-tune the product and develop it into what it needs to be. The device was tested on 14 participants, and a study of its efficacy, published in the journal PLOS ONE, reported positive results: the device enhanced the ability of blind people to recognize objects.
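To make the idea of a sound cue coming from an object’s direction more concrete, here is a minimal Python sketch. It is not ARIA Research’s implementation: the `SOUND_CUES` table, the function names, and the simple constant-power stereo panning are all assumptions made for illustration. Only the three object-to-sound pairings come from the description above.

```python
import math

# Hypothetical lookup table; only these three pairings are mentioned in the article.
SOUND_CUES = {
    "keys": "jangling",
    "tree": "rustling",
    "mobile phone": "buzzing",
}

def azimuth_degrees(x, z):
    """Angle of an object relative to straight ahead (0 degrees).

    x is the distance to the wearer's right and z the distance in front,
    both in metres. Positive angles are to the right.
    """
    return math.degrees(math.atan2(x, z))

def stereo_gains(azimuth):
    """Constant-power pan: map an azimuth in [-90, 90] to (left, right) gains."""
    clamped = max(-90.0, min(90.0, azimuth))
    theta = (clamped + 90.0) / 180.0 * (math.pi / 2)
    return math.cos(theta), math.sin(theta)

def cue_for(label, x, z):
    """Return the cue to play for a detected object, or None if it has no cue."""
    sound = SOUND_CUES.get(label)
    if sound is None:
        return None
    azimuth = azimuth_degrees(x, z)
    left, right = stereo_gains(azimuth)
    return {"sound": sound, "azimuth": azimuth, "left": left, "right": right}

# Keys lying one metre ahead and one metre to the right of the wearer.
print(cue_for("keys", 1.0, 1.0))
```

In this sketch an object straight ahead plays equally in both ears, while an object far to the right plays almost entirely in the right ear. A real device would rely on proper spatial audio rather than plain left/right panning.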

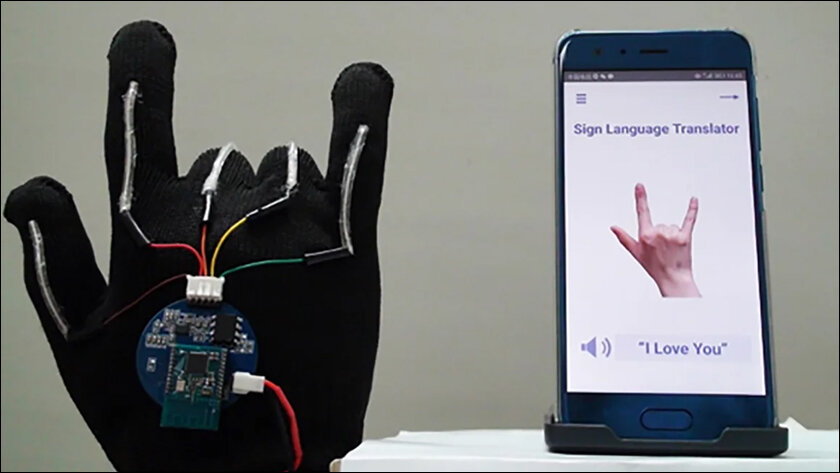

AI-powered gloves and an AI-powered throat patch

Fifth Sense, by Pune-based startup Glovatrix, is an AI-powered “glove/smartwatch” with sensors that use machine learning to map and learn sign language gestures. These gestures are then translated into speech in real time. It also works the other way around: a mic records speech and translates it into images displayed on the smartwatch part of the glove. According to Glovatrix co-founder Aishwarya Karnataki, the team has achieved 98% accuracy for over 50 different sign language gestures. She explains that it takes about ten recordings from ten different individuals for the machine to learn a new gesture, and that they can add about 100 new gestures each week. The device is expected to cost about Rs. 20,000.
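The article does not say which learning method Glovatrix uses, so the following Python sketch is only an illustration of how a handful of recordings per gesture could be turned into a recognizer. It uses a nearest-centroid classifier, and the two-number feature vectors, gesture names, and function names are all hypothetical.

```python
import math

def centroid(vectors):
    """Average a list of equal-length feature vectors, component by component."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]

def train(recordings):
    """Build one average 'template' per gesture.

    recordings maps a gesture name to a list of feature vectors,
    one vector per recorded example of that gesture.
    """
    return {gesture: centroid(vectors) for gesture, vectors in recordings.items()}

def classify(model, sample):
    """Return the gesture whose template is closest to the new sample."""
    return min(model, key=lambda gesture: math.dist(model[gesture], sample))

# Made-up sensor readings, purely for illustration.
recordings = {
    "hello": [[0.9, 0.1], [1.0, 0.0], [0.8, 0.2]],
    "thanks": [[0.1, 0.9], [0.0, 1.0], [0.2, 0.8]],
}
model = train(recordings)
print(classify(model, [0.85, 0.15]))
```

Adding a new gesture in this sketch only means adding another entry to `recordings` and retraining, which is one reason a small number of examples per gesture can be enough for simple template-based methods.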

A team of engineers from UCLA has invented an AI-powered throat patch about a square inch in size and 0.06 inches thick. This tiny device (essentially a sticker) is built for people with damaged or dysfunctional vocal cords. To use it, you just stick it on your throat. The self-powered device uses magnets to detect movement in the larynx muscles, and those movements are converted into electrical signals and then into speech. The machine learning algorithm works by collecting data on the way people move their throat muscles while they speak, and then using that data to correlate throat muscle movements with words. According to UCLA, the throat patch can predict what someone is trying to say by reading their throat muscles with an accuracy of about 94.8%, which is not bad.

There is hope for us after all

Other noble efforts to improve the lives of people with disabilities include speech-to-subtitle glasses for the deaf, glasses to help the colorblind see colors better, and smart toys for children with learning disabilities. While human beings have historically been known to take every major technological breakthrough and turn it into a weapon of some sort, these researchers are using AI and machine learning to address the unfair nature of life and the plight of people who have been less fortunate. With everything that’s going on in the world today, it’s refreshing to see that there are still people dedicating their lives to something as meaningful as improving the quality of life of people with disabilities.
